
PTMs (polynomial texture maps) are a simple representation and file format that allow lighting direction and material properties to be controlled interactively. This is especially useful for revealing subtle shape detail on the surface of objects. PTMs are produced from a set of images taken under varying lighting directions. There are two general approaches to making PTMs:

1. Highlight-based PTMs: Place one or two red or black balls (snooker balls work well) next to the object you are photographing. Take multiple pictures of the object and ball(s) with a digital camera set on a tripod and triggered by a handheld flash. Move the handheld flash to various positions, keeping it at a fixed distance from the object, and let the flash trigger the camera so that each image is taken under a different lighting direction. Put all the images into a directory and choose that directory within the HighlightPTMbuilder tool. This tool automatically finds the ball(s) in the images, recovers the light directions, and builds a PTM from this dataset.

2. Manually built PTMs: Use a text editor to write a .lp (light position) file that tells the PTMfitter where the image files are and what their lighting directions are. You can use any number of aids to collect the images under known lighting directions; some methods that we and others have employed are shown below.
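The highlight-based approach relies on the ball acting as a curved mirror: the highlight appears where the sphere's surface normal bisects the directions to the camera and to the flash. The sketch below recovers a light direction from a highlight, then fits the per-pixel biquadratic polynomial a PTM stores, a0·lu² + a1·lv² + a2·lu·lv + a3·lu + a4·lv + a5, where (lu, lv) are the first two components of the unit light direction. It assumes an orthographic camera looking straight down and a ball whose image center and radius have already been found. All function names are hypothetical; this is not the HighlightPTMbuilder or PTMfitter code.

```python
import math


def light_dir_from_highlight(hx, hy, cx, cy, radius):
    """Unit light direction from a specular highlight on a shiny ball.

    Assumes an orthographic camera looking straight down, so the view vector
    (surface toward camera) is v = (0, 0, 1). (hx, hy) is the highlight pixel;
    (cx, cy, radius) describe the ball's outline in the image. Image rows grow
    downward, so y is flipped to keep a right-handed frame with z toward camera.
    """
    nx = (hx - cx) / radius
    ny = -(hy - cy) / radius
    nz = math.sqrt(max(0.0, 1.0 - nx * nx - ny * ny))
    # Mirror reflection of v about the sphere normal n: L = 2(n.v)n - v, n.v = nz.
    return (2 * nz * nx, 2 * nz * ny, 2 * nz * nz - 1)


def ptm_basis(lu, lv):
    """The six terms of the per-pixel biquadratic PTM polynomial."""
    return [lu * lu, lv * lv, lu * lv, lu, lv, 1.0]


def solve(m, b):
    """Solve the small dense system m x = b by Gaussian elimination with pivoting."""
    n = len(b)
    a = [list(row) + [b[i]] for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def fit_ptm_pixel(light_dirs, lums):
    """Least-squares fit of one pixel's six coefficients via the normal equations."""
    rows = [ptm_basis(lx, ly) for lx, ly, _ in light_dirs]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(6)] for i in range(6)]
    atb = [sum(r[i] * y for r, y in zip(rows, lums)) for i in range(6)]
    return solve(ata, atb)


def eval_ptm(coeffs, lu, lv):
    """Relight: evaluate the fitted polynomial for a new light direction (lu, lv)."""
    return sum(c * t for c, t in zip(coeffs, ptm_basis(lu, lv)))
```

Each image contributes one (direction, luminance) sample per pixel, so at least six well-spread directions are needed. Lights all at the same elevation make lu² + lv² constant and the fit singular, which is one reason to vary flash height as well as azimuth. Once fitted, `eval_ptm` can be called with any (lu, lv) to relight the pixel interactively.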

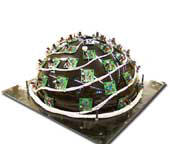

Our original dome was made out of hot-melt glue and wooden dowels in the shape of a subdivided icosahedron. A tripod holds a camera looking down from directly above, and a handheld lamp is sequentially moved to each triangular face to collect 40 images. Images were taken at night to avoid ambient illumination.
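The face count suggests one plausible geometry: splitting each of an icosahedron's 20 triangles into 4 gives 80 faces, and with a vertex at the zenith exactly 40 face centers lie above the horizon, matching the 40 images. The sketch below generates those lamp directions under that assumption; the subdivision frequency and orientation are guesses, not documented details of the dome, and the function names are hypothetical.

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def icosahedron():
    """The 20 faces of a unit icosahedron with one vertex at the zenith."""
    h, r = 1 / math.sqrt(5), 2 / math.sqrt(5)
    top, bottom = (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)
    upper = [(r * math.cos(2 * math.pi * k / 5), r * math.sin(2 * math.pi * k / 5), h)
             for k in range(5)]
    lower = [(r * math.cos(2 * math.pi * (k + 0.5) / 5),
              r * math.sin(2 * math.pi * (k + 0.5) / 5), -h) for k in range(5)]
    faces = []
    for k in range(5):
        k1 = (k + 1) % 5
        faces += [(top, upper[k], upper[k1]), (upper[k], lower[k], upper[k1]),
                  (upper[k1], lower[k], lower[k1]), (bottom, lower[k1], lower[k])]
    return faces


def subdivide(faces):
    """Split each triangle into four, pushing the new midpoints out onto the sphere."""
    out = []
    for a, b, c in faces:
        ab = normalize([x + y for x, y in zip(a, b)])
        bc = normalize([x + y for x, y in zip(b, c)])
        ca = normalize([x + y for x, y in zip(c, a)])
        out += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
    return out


def dome_lamp_directions():
    """Unit directions to the centers of the faces that lie above the horizon."""
    centers = [normalize([sum(axis) for axis in zip(*face)])
               for face in subdivide(icosahedron())]
    return [d for d in centers if d[2] > 0]


print(len(dome_lamp_directions()))  # 40
```

Because the icosahedron is centrally symmetric, every face has an opposite twin, so any orientation in which no face center lies exactly on the horizon splits the 80 faces 40/40.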

Although somewhat complex, this automated dome collects 50 images under varying lighting without manual intervention. It incorporates 50 custom-made flash boards under hardware control. A digital camera mounted on top is controlled by a laptop, to which images are downloaded immediately after they are taken. It was constructed by Bill Ambrisco and Eric Montgomery.

This ‘light-arm’ was designed and constructed by Bill Ambrisco and uses 12 commercial photographic flashes on a manually rotated arm. A separate tripod holds the digital camera, avoiding mechanical shake. The camera views the object or scene through the bearing races that provide the pivot for the arm. This device was used by the National Gallery in London to collect PTMs.
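Since the camera looks along the arm's pivot axis, rotating the arm changes only the azimuth of every flash, while each flash's place on the arm fixes its elevation. The sketch below turns that into a list of unit light directions. It assumes the arm is an arc centred on the object; the 12 elevation angles and the 45° arm stops are hypothetical values, not measurements of the National Gallery device.

```python
import math


def arm_light_directions(flash_elevations_deg, arm_azimuths_deg):
    """Unit light directions for flashes fixed on an arm that rotates about the
    camera's optical axis (z points from the object toward the camera)."""
    dirs = []
    for az in arm_azimuths_deg:
        a = math.radians(az)
        for el in flash_elevations_deg:
            e = math.radians(el)
            dirs.append((math.cos(e) * math.cos(a), math.cos(e) * math.sin(a), math.sin(e)))
    return dirs


# Hypothetical layout: 12 flashes from 6 to 83 degrees elevation,
# arm stopped every 45 degrees of rotation.
elevations = [6 + 7 * i for i in range(12)]
azimuths = range(0, 360, 45)
directions = arm_light_directions(elevations, azimuths)
print(len(directions))  # 96
```

Keeping the topmost flash below 90° avoids capturing the same zenith direction at every arm stop.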

This portable device was constructed for John Yoshida at the California Department of Justice for collecting PTMs of footprints. Mike Cavallo did the electrical design and software. The system uses flash bulbs as the illumination source, and the arm is manually rotated to vary the incident lighting direction along one axis.

Wouter Verhesen built this very inexpensive PTM setup in the Netherlands. A used point-and-shoot digital camera was modified to extend the flash unit, which is moved to various cutouts in a Styrofoam hemisphere to vary the lighting direction.

Cultural Heritage Imaging (CHI) has built a PTM dome that allows careful control of the light source's color spectrum in addition to lighting direction. Light is filtered at the illumination source and brought into the dome with fiber optic cables. This permits the elimination of unnecessary and/or damaging wavelengths, which makes imaging of light-sensitive materials possible. For example, the CHI dome has been used to image oil paintings and fragile wax and lead seals attached with string to medieval documents. Multi-spectral imaging has also been done using this equipment.

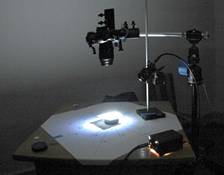

Cultural Heritage Imaging has acquired PTM images using the simple photographic assembly shown in the accompanying image. A digital camera is fixed to provide an overhead view of an object. The object rests on a template that directs the photographer to place a light source at controlled positions in x, y, z. Although the acquisition sequence takes some time, it requires minimal hardware.
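With a template, each image comes with a light position rather than a direction, so the positions must be converted before they can go into the .lp file the PTMfitter reads. The sketch below normalizes the vector from the object toward the light and writes one line per image. It assumes a common .lp layout (image count on the first line, then a filename followed by x y z on each line) and treats the light as seen from the object's center, which is only an approximation when the lamp is close to a large object. The function names are hypothetical.

```python
import math


def direction_from_position(light_xyz, target_xyz=(0.0, 0.0, 0.0)):
    """Unit vector from the target point toward a light placed at light_xyz."""
    d = [l - t for l, t in zip(light_xyz, target_xyz)]
    n = math.sqrt(sum(c * c for c in d))
    return tuple(c / n for c in d)


def lp_text(entries, target_xyz=(0.0, 0.0, 0.0)):
    """Build .lp file contents from (image filename, (x, y, z) light position) pairs.

    Assumed layout: the image count on the first line, then one line per image
    with the filename and the unit light direction.
    """
    lines = [str(len(entries))]
    for name, pos in entries:
        x, y, z = direction_from_position(pos, target_xyz)
        lines.append(f"{name} {x:.6f} {y:.6f} {z:.6f}")
    return "\n".join(lines) + "\n"
```

For example, `lp_text([("img01.jpg", (0, 0, 10)), ("img02.jpg", (3, 0, 4))])` yields a two-image file whose second line is `img01.jpg 0.000000 0.000000 1.000000` and whose third is `img02.jpg 0.600000 0.000000 0.800000`; write the string out with an ordinary `open(..., "w")`.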

We have built a real-time system that flashes 8 high-intensity white LEDs (Luxeon Vstars) at 500 frames per second while a synchronized high-speed video camera captures frames and feeds the images to a GPU. Normal vectors are computed at 60 frames per second and used to display objects with transformed reflectance functions in real time. The system is described in our EGSR paper.
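The rates fit together: at 500 frames per second with 8 LEDs, the camera completes 500 / 8 = 62.5 full lighting cycles per second, enough for about 60 normal fields per second. A standard way to get a normal from one cycle is least-squares Lambertian photometric stereo (Woodham's formulation); the per-pixel sketch below is that textbook method, used here as a stand-in, not necessarily the computation the GPU system performs. It assumes known unit LED directions and no shadowed or specular samples, and the function names are hypothetical.

```python
import math


def det3(a):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def solve3(m, b):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(m)
    x = []
    for col in range(3):
        mc = [list(row) for row in m]
        for r in range(3):
            mc[r][col] = b[r]
        x.append(det3(mc) / d)
    return x


def lambertian_normal(light_dirs, intensities):
    """Least-squares Lambertian photometric stereo for one pixel.

    Model: I_k = albedo * (n . l_k). Solves (L^T L) g = L^T I for g = albedo * n,
    then splits g into its length (albedo) and direction (unit normal).
    """
    ltl = [[sum(l[i] * l[j] for l in light_dirs) for j in range(3)] for i in range(3)]
    lti = [sum(l[i] * v for l, v in zip(light_dirs, intensities)) for i in range(3)]
    g = solve3(ltl, lti)
    albedo = math.sqrt(sum(c * c for c in g))
    return tuple(c / albedo for c in g), albedo
```

On a GPU the same small solve runs independently for every pixel, which is what makes video-rate normals feasible; a more robust version would drop samples that are shadowed or dominated by highlights before solving.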

Paul Debevec’s group at USC has also built a series of ‘light stages’ for collecting images and video of actors under varying lighting.