The Client Utility project at HP Labs was started to explore ideas needed to build a scalable, secure, and manageable peer-to-peer computing environment. The results of that research formed the basis for e-speak, an open source project that HP also released as a fully supported product. This report is the most complete description of the Client Utility architecture at the time the research work was transferred to the newly formed Open Services (soon to be renamed E-speak) Operation. It is reproduced here for its historical interest, showing the thinking that led to e-speak and the concept of e-services.

* Present address: Transmeta Corporation, Santa Clara, CA

Before there was e-speak[1], there was the Client Utility project at HP Labs. Work began in late 1995, and a first prototype was ready by March 1996. That prototype was refined over the next six months, and a set of use cases was put together to demonstrate the vision. Once HP management committed to going beyond the prototype stage, the entire system was rearchitected based on what we had learned from the prototype. Eventually, these ideas were implemented as the first prototype of e-speak and announced to the world in May 1999. The motivation for, and ideas behind, e-speak have been described elsewhere[2].

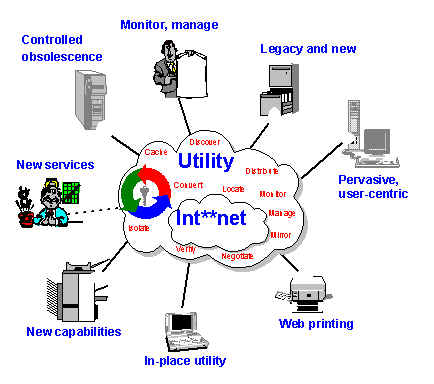

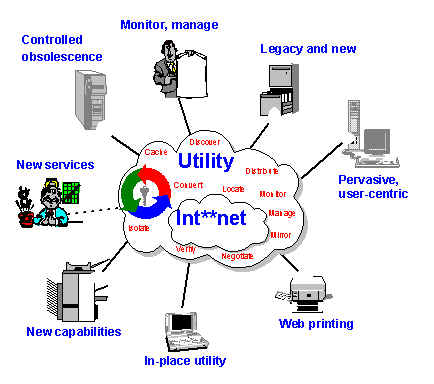

As you will see from reading this document, the architecture describes a peer-to-peer system, one of the earliest to be based on this concept. Another idea at the foundation of the architecture is that everything can be thought of as a service. This idea led to the e-services vision introduced by HP, which has recently captured the imagination of the computing community as web services. The first figure, which we used as the logo for the project, shows examples of many of the features we were designing for. Today, we see the same features listed for peer-to-peer infrastructures and web services environments.

There are three principal ways that the Client Utility architecture anticipated the environment needed for peer-to-peer computing. The first was treating everything as a service or resource that could be linked into a dynamically composed value chain, instead of having a client applet use one specific web service. The second was recognizing the need for a flexible way of describing services and a powerful means of finding them, which led to vocabularies as discoverable resources. The third was recognizing the need for advertising services, so that those looking for services could find them even without knowing ahead of time who might be providing them.

As with many research projects, the Client Utility was rich in ideas but weak in documentation. This report is the most complete specification of the architecture. Most of the ideas were present in the open source releases through December 1999, although some pieces were left for later implementation. After that, the product was targeted at the B2B portal space, and substantive changes were made. However, many of the concepts presented here survived.

Since this document is to be treated as a historical document, only minor changes were made in producing this report. For example, the "Confidential" label was removed and dead web links were replaced with explicit references. No attempt has been made to finish any of the incomplete parts of the architecture or to update them with what we learned during the implementation. In particular, the treatment of security policy specification and of interfacing to management systems is weak. The failure limitation work is still a subject of active research. What follows is the document in its original form.

[2] "E-speak E-xplained," HP Labs Technical Report HPL-2000-101.

Why the world needs the Client Utility

Today at our homes and work we do not think about power, how it is generated or where it comes from. We simply plug into the wall and use the facilities provided by our local power utility company. Client Utility computing intends to create a paradigm for end-user computing where users simply plug into the wall and use the facilities, services and applications provided by their local utility. This form of computing is realized by a middleware infrastructure that facilitates ubiquitous/pervasive computing.

For information technology to become truly pervasive, it must transcend being merely manufacturable and commonplace. It must become intuitively accessible to ordinary people and must deliver sufficient value to justify the large investment needed in the supporting computation infrastructure. The consumer's expectations from the computation infrastructure or utility will be substantial. Just as people pick up a phone and expect a dial tone, so too will people expect the infrastructure to be available, ready and waiting.

In a high-bandwidth, digital, multimedia world, in which clients (general-purpose computers or appliances) connect to a utility, people could pay for their computing by usage, modulated by guaranteed response requirements. This enormous paradigm shift turns what is now a capital investment into a competitive service, like electricity and water. It will do for computing what the Web did for data. It is not the ultimate revenge of time-sharing, which was proprietary, fixed-resource, and usually location-dependent. In this form of computing, called Client-Utility Computing, standards-based open resources, located arbitrarily, are combined as needed for a particular job. It will no longer matter where the computers operate or which manufacturer makes them.

The suicidal obsolescence schedule of today will be replaced by the capacity requirements of the service provider, with upgrades undetectable by the end-user within a given performance envelope. The trend towards such utilities is likely, since the end-user benefits from greatly decreased cost of ownership, the manufacturers gain a relaxation of insane time-to-market demands, and a new lucrative service industry should emerge. If this new model of computing becomes practical and reliable, history tells us that current manufacturers who do not adjust in time will not be able to satisfy customers and will suffer dire consequences.

Client-Utility enables ubiquitous services over the Int**net (where "**" is "er" or "ra"), making existing resources such as files, printers, Java objects, and legacy applications, or for that matter any software or hardware resource, available as services. In this way, the Client Utility fundamentally lowers the barriers for providers of new services.

The infrastructure provides the basic building blocks for service creation, billing, management, and access: dynamic discovery of new providers and capabilities, security of access to resources and data, negotiation, monitoring, and management of resources and data, replication to maintain reliability, caching to enhance performance, coherency and conversion of data, etc. These capabilities are enabled transparently for both legacy and new applications, OSes, and systems.

The architecture is built on a stringent security model and on a small number of fundamental abstractions. These abstractions are inherently extensible to allow for dynamic composition and customization. The implementation protocols have been architected specifically to scale cleanly from utilities that include a few machines to a utility that includes all machines on the Internet.

The Client Utility is built on a scalable inter-machine protocol that seamlessly extends the resources available to applications to include remote resources, such as disk space, compute cycles, and database records, to name a few. There are a few important points to note about the infrastructure. First, it is a peer-to-peer protocol architected specifically for the kind of scalability required by the Internet and Web environments of today. All basic interactions in the infrastructure involve two individual machines at a time; there is no notion of multicasts or broadcasts in the underlying architecture, although these mechanisms can be implemented above it.
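To make the layering concrete, here is a minimal Python sketch of a multicast built entirely from pairwise sends. The `send` callable is a hypothetical stand-in for the infrastructure's two-machine primitive; the function name, the per-target result dictionary, and the machine names are illustrative assumptions, not part of the Client Utility design.

```python
from typing import Callable, Dict, List

# Hypothetical point-to-point primitive: (source, destination, payload) -> delivered?
SendFn = Callable[[str, str, bytes], bool]


def multicast(send: SendFn, source: str, targets: List[str], payload: bytes) -> Dict[str, bool]:
    """Deliver payload to every target using only two-machine interactions.

    Returns per-target results so that the layer above, not the core
    protocol, decides how to treat partial delivery.
    """
    results: Dict[str, bool] = {}
    for target in targets:
        if target == source:
            continue  # skip self-delivery
        results[target] = send(source, target, payload)
    return results


if __name__ == "__main__":
    def fake_send(src: str, dst: str, data: bytes) -> bool:
        return dst != "machine-c"  # pretend machine-c is unreachable

    print(multicast(fake_send, "machine-a", ["machine-b", "machine-c"], b"hello"))
    # {'machine-b': True, 'machine-c': False}
```

Keeping group delivery out of the core means the base protocol never has to define what a "group" is or how partial failure should be handled; each layer above can choose its own policy.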

Second, the infrastructure reifies all resource accesses, thus making resource access protocols of any kind, such as file accesses or bank transactions, highly extensible. In short, resource access protocols become programmable entities that can be extended to add features of manageability, reliability, replication, accounting, payment information and the like, in most cases without affecting clients or resources. Third, the infrastructure does not require applications to be rewritten in order to benefit from it in legacy environments. Applications such as Lotus 1-2-3 or Microsoft Word seamlessly benefit from the infrastructure as long as Client Utility emulation has been integrated into the run-time environment. Fourth, the infrastructure works in conjunction with existing operating environments and builds upon them, rather than replacing or modifying them. In fact, it builds the next level of abstractions above existing distributed environments and protocols, such as DCOM, COSS, HTTP, and Java RMI.

Finally, a word about security. Security is a critical deciding factor for any large-scale distributed system, especially one that can grow to the scale of the Internet. Here, malicious hackers and inadvertent configuration and implementation errors are the norm, not the exception. Issues of scale dictate that a single security policy for all users, dependence upon the physical security of machines, and centralized information banks are simply not viable solutions. The Client Utility infrastructure ensures that each machine or user in a utility can have a different security policy, without allowing a security breach on a single machine to affect the security of the entire system.

Client Utility computing is the form of end-user computing enabled by this infrastructure. In this form of computing, users have competitive but seamless access to distributed resources. Access to resources can be modulated by the user's requirements of cost, performance, or functionality. No longer is a user tied only to the resources on his or her machine, nor does the user have to bend over backwards (thanks to the highly non-intuitive nature of existing distributed resource access protocols) to get access to resources from elsewhere.

Resource access does not mean owning the machine, configuring the resource (such as through file system mounting), or even managing the resource itself. All these activities can be automated and outsourced, thus opening up the possibilities for new types of service industries. It is important to note that such a paradigm does not require users to give up control of their machines or to compromise on the safety of their data. This fundamental change in end-user computing allows for the creation of paradigms where users deal with computing and computing systems much like they deal with power utilities and paper. In short, users may pay for it and use it as an integral part of their daily lives, but in general they do not notice or pay attention to its existence.

Gives examples of how the Client Utility changes the way people use computers.

1.2. Enabling Infrastructure

1.3. Scenarios

1.3.1. Introduction to Scenarios

Client Utility computing makes possible many different scenarios which would otherwise only stay on our technology wish list. Many of these scenarios ameliorate existing ingrained problems, but quite a few of them open up the possibility of entirely new markets and businesses. In fact, there are few restrictions on the kind of utilities that may be built. For example, a printer manufacturer may want to deploy a printing utility to provide innovative printing solutions; a large enterprise such as FedEx may want to deploy an enterprise utility to simplify (read: lower the costs of) the management and administration of its IT infrastructure; a company like GM that needs large Web sites can significantly increase its flexibility and lower costs through a Web Utility; and so on. The next few sections present utilities that could be of interest to various HP businesses and divisions.

A whole enterprise or simply parts of it may use a utility for the IT infrastructure. Users in an enterprise get simultaneous access to a myriad of applications from different operating systems, transparently through the utility. Type "winword" on a UNIX machine and the utility will find the application, run it on an appropriate machine and forward the display to the end user's machine, without any prompting. Incompatible file formats from different versions of software are seamlessly handled; the utility uses attribute matching to find and handle differences between file formats.

System administrators may reset and move storage around for best utilization of network disks. Thanks to the utility, this does not affect active users or running applications. Rolling upgrades of operating systems and application software do not affect any users and applications in the enterprise; the utility virtualizes resource accesses so that name transformations or changes in application availability can be completely hidden. During peak processing requirements or heavy business seasons, compute resources from outside vendors are automatically and seamlessly brought on-line to ramp up capacity. In short, a utility environment in an enterprise drastically reduces the cost of ownership without rapid obsolescence or complete rewrite of application programs. Enterprise intranets and systems work as they are supposed to, smoothly, not as a disjoint system requiring immense amounts of care and feeding.

Internet service providers can change the very model of service that they provide to their users. An ISP can provide users with a virtual personal computer. This customizable personal computer may contain applications from any number of different systems, and users may configure the environment and directories as they choose. No matter where in the world users log in from, they see the same set of files, directories, preferences, and resources. The ISP dynamically maps this virtual personal computer to resources on a large number of different machines, changing this mapping as required by system loads, user location, or network bandwidth.

None of this mapping and remapping affects active users or running applications. So, a user may start a large computation on his virtual personal computer, fly to another state, log in, and check on the progress of the computation. Naive users do not have to worry about installing applications or configuring them. They simply boot their machines and use a pre-configured virtual computer provided seamlessly by their ISP utility. At the end of the month, users pay one additional bill, to their ISP utility. Simply put, none of this service model would be technologically feasible without a utility infrastructure.

ISPs can also manage their plethora of machines more easily via the management infrastructure provided by the Client Utility. Centralized management is provided by the same mechanisms used to provide transparent access to users. All that is needed is to give the administrator a different set of permissions than those given to other users. In addition, a hacker breaking into one of the ISP's machines will have a difficult time compromising more of the system.

Print Utilities extend the notion of what a printer can do and how tightly it may be integrated with other compute resources, and they allow printer manufacturers to think beyond the printer hardware to alternative commercializable print solutions. A large rendering job that reaches a printer is automatically forwarded by the Print Utility to the nearest machine with adequate horsepower. Such an operation cannot be implemented simply through remote management of printers; it needs a utility-like infrastructure that allows the printer to seamlessly locate remote compute cycles and then access them.

When a document in a new, non-standard format reaches a printer that does not recognize it, the printer uses the utility to find a resource on the Internet that automatically renders the document into a recognizable format. Travelers in an airport walk up to kiosk printers and identify themselves by swiping their smart credit cards. The print utility contacts the traveler's home machine automatically, extracts all urgent email messages, and displays them on a small display attached to the kiosk printer. The traveler points to one or more items and they are automatically printed out, with the charges automatically sent to the traveler's credit card. Here the print utility enables an entirely new business opportunity for printer manufacturers and resellers.

In a similar vein, a travelling executive could walk up to an Ethernet tap or phone line in an offsite office and hook up his portable. He opens an appropriate document, specifies the kind of printing desired, and prints it, without having the appropriate drivers or having to configure the appropriate printer queues. Here the utility locates the machine with the appropriate drivers and sends the document to it. That machine in turn locates the nearest appropriate printer and sends the print job to it.

Utilities that allow for the on-line representation of business processes, inter-company financial transactions and other Internet services are very much in the realm of short term possibilities. Of course, many companies are marketing business management solutions, but each is a point solution to a specific problem. The Client Utility leads to an environment where such solutions are constructed by composing a number of services instead of writing a specific program. It is the Client Utility infrastructure that makes such compositions feasible.

The change is significant. When in need of parts, a computer manufacturer's inventory management program puts out an on-line request for bids with appropriate specifications for both the parts and the supplier businesses. Supplier businesses with the required credentials (supplied by an online certification authority) bid for the part dynamically. The utility ensures that the bids are matched with what the supplier companies claim to provide and uses an on-line negotiation service to arrive at a price that is fair to both parties. Finally, the manufacturer's inventory management client contacts an online representation of a packaging service through the utility and arranges to have the parts delivered. Negotiation for services, contract maintenance, and payment support can be provided as available services, while the matching and mapping of bids and requirements, deployment of services, and real-time location of appropriate services are done by the utility itself.

This sample is only one possibility in the realm of commercial services utilities. It is also possible to use the same model to create new software businesses. A deployer of a new service in a commercial services (e-commerce) utility need only describe the components needed by the new service. If these components are provided by other providers in the Client Utility, the workflow completely specifies the service. When a customer request comes in, the service provider gets bids from its providers of the individual components, selects the appropriate ones to do the work, and returns the final result to the customer. In this way a software service is no different than a build to order computer made up from parts delivered by a just-in-time inventory management system.
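The step in which the service provider collects bids and picks its component providers can be sketched in a few lines of Python. Choosing the cheapest bid per component is an assumed policy for illustration only; the text says just that the provider "selects the appropriate ones", and a real utility could also weigh credentials, delivery time, or contract terms. `Bid`, `select_providers`, and the provider names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Bid:
    provider: str
    component: str
    price: float


def select_providers(components: List[str], bids: List[Bid]) -> Dict[str, Bid]:
    """Choose one bid per required component (cheapest wins, by assumption).

    Raises LookupError when a component has no bid, because the composed
    service cannot then be delivered.
    """
    wanted = set(components)
    chosen: Dict[str, Bid] = {}
    for bid in bids:
        if bid.component not in wanted:
            continue  # ignore bids for parts this service does not use
        best = chosen.get(bid.component)
        if best is None or bid.price < best.price:
            chosen[bid.component] = bid
    missing = [c for c in components if c not in chosen]
    if missing:
        raise LookupError("no bids for: " + ", ".join(missing))
    return chosen


if __name__ == "__main__":
    bids = [Bid("acme", "render", 5.0), Bid("zenith", "render", 3.0),
            Bid("vault", "storage", 1.0)]
    for component, bid in select_providers(["render", "storage"], bids).items():
        print(component, bid.provider, bid.price)
```

The workflow, here just the list of component names, fully specifies the service. The selection policy is a separate, replaceable step, which fits the just-in-time analogy in the text.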

The Client Utility provides the infrastructure the provider needs to make money from the service; this includes service specification, dynamic installation and execution as a result of client accesses, and security. In each of these steps the utility plays a significant role. In the service specification step, the new service may use one or more pre-existing utility services; the utility here provides the basis for thinking and reasoning about value-added services. The glue for the installation step is the utility infrastructure's APIs, which automatically subsume advertising and informing clients if necessary. During the execution step, the utility acts as a composition service, allowing for the interposition of payment and contract maintenance options, as well as allowing the new service to easily access and use pre-existing services. In every step of the process, the Client Utility protects the integrity of all the participants.

Large, complex websites of today can be reorganized into highly manageable web utilities. An image processing request comes into a large web site; the utility automatically finds the least loaded machine and runs the image processing application. In fact, if the requestor of the processing needs a printout, the utility could find an appropriate printer geographically near the requestor (say, at the nearest Kinko's) and automatically send the job there after informing the requestor.

As requests increase beyond a certain threshold, the utility automatically moves the overflow to the computer center utility. To enable this, the administrator simply has to modify the capacity constraints at the utility management console for the website. Adding machines, changing software, and failures are handled without affecting incoming requests. As machines come up and go down, the utility ensures continuity and resource visibility. To support better availability for a particular set of files, the website administrator simply changes the replication policy at the utility management console. The utility ensures that the appropriate replication module gets interposed.

Compute center utilities rent out hardware to other commercial concerns in very novel ways. They do not rent out disks; they rent out disk space. Even more, they rent out file services such as version control, backups, etc. They do not rent out computers; they rent out compute cycles. Even more, they rent out reliable access to cycles by rolling work over to new machines as others get overloaded. They do not rent out memory SIMMs; they rent out the use of RAM. Even more, they can rent out non-volatile storage for a fee.

During the Christmas season, a package handling company could automatically offload overflow computation to machines in the compute center utility. To the running applications that need these extra cycles, this is completely transparent. Due to a contract with the compute center, the package handling company's machines can seamlessly access the cycles on the compute center machines. Interestingly enough, if the compute center wants to ensure that the package handling company uses only 100,000,000 cycles/second and no more, it can do so through the utility infrastructure's fine-grained resource access checks.
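One simple way to enforce a cap like the 100,000,000 cycles/second in this scenario is a fixed-window quota check. The Python sketch below assumes that each work request declares how many cycles it will consume and that the caller supplies the current time. This is an illustrative technique chosen for the sketch, not the Client Utility's access-check mechanism, which the text does not detail. `CycleQuota` is a hypothetical name.

```python
class CycleQuota:
    """Fixed-window cap on the compute cycles a client may consume per second.

    Assumes each request declares its cycle count and the caller passes
    the current time in seconds (kept explicit for determinism).
    """

    def __init__(self, limit_per_second: int) -> None:
        self.limit = limit_per_second
        self.window_start = 0.0
        self.used = 0

    def request(self, cycles: int, now: float) -> bool:
        """Return True and record usage if the request fits in this window."""
        if now - self.window_start >= 1.0:
            # A new one-second window has begun: reset the counter.
            self.window_start = now
            self.used = 0
        if self.used + cycles > self.limit:
            return False  # would exceed the contracted rate; deny access
        self.used += cycles
        return True


if __name__ == "__main__":
    quota = CycleQuota(100_000_000)
    print(quota.request(60_000_000, now=0.0))   # True
    print(quota.request(50_000_000, now=0.5))   # False: 110M in one window
    print(quota.request(50_000_000, now=1.2))   # True: new window
```

A fixed window is the crudest choice. A sliding window or token bucket would smooth bursts at window boundaries, but the check still happens at the same place: on every access, before the work is admitted.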

Through similar mechanisms, small businesses can rent disk space from a compute center utility with varying constraints, such as storage that is backed up once a week or remote storage access times of less than 1 ms. Such on-line outsourcing of hardware resources is not practical with current technology. In fact, a Client Utility can evolve from a conventional client-server environment. Here the existing infrastructure itself becomes a utility and eventually links with an external computer center utility to get additional computation resources.

It is important to note that these utilities are not carved out from disparate and disjoint sets of technologies. In fact, designing and constructing such utilities would be impossible without a structured way of thinking about the technology for utility infrastructures. By solving such problems as naming, security, and location independence in the underlying infrastructure, the Client Utility makes a large number of new approaches feasible.

Provides a brief overview of the technology and the key abstractions.

2. TECHNOLOGY OVERVIEW

2.1. Introduction to the Technology

At the core of our technology lies the ability to virtualize resource accesses on host systems (which could be appliances, workstations, or large servers) and map these accesses to the uniform resource abstraction presented by a Distributed Resource Interchange Protocol (DRIP). Virtualization of resource accesses allows for such characteristics as mobility, distribution, availability, manageability, security, and reliability to be added seamlessly to the resource being accessed, without affecting the client applications.

Virtualization paves the way for domain specific protocols such as file access interfaces and the X-protocol to be mapped to a generic interchange protocol like DRIP. The use of DRIP as a protocol creates a highly extensible software bus that can connect any set of resource provider-accessor pairs without extensive a priori arrangement. This software bus is programmable and may be associated with properties such as secure access, negotiation for services, attribute-based location of appropriate services and replication to name a few. This generic interchange protocol allows fine-grained manipulation of inter-module (or client-resource provider) interactions. It also forms the basis for creating higher level resource and management abstractions such as the virtual personal computer or the web management utility, to name a few.
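The following Python sketch illustrates virtualization under stated assumptions. Two unrelated, domain-specific calls, a file read and an X-style line drawing, are both mapped onto one uniform request type. A generic property such as auditing can then be applied without knowing either domain. `GenericRequest` and its fields are hypothetical; the actual DRIP message format is not described in this section.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass(frozen=True)
class GenericRequest:
    """Hypothetical uniform request form; not the real DRIP wire format."""
    resource: str                      # name in the client's name space
    operation: str                     # verb the resource's proxy understands
    args: Dict[str, Any] = field(default_factory=dict)


def virtualize_file_read(path: str, offset: int, length: int) -> GenericRequest:
    """Map a domain-specific file read onto the generic form."""
    return GenericRequest(path, "read", {"offset": offset, "length": length})


def virtualize_draw_line(window: str, x1: int, y1: int, x2: int, y2: int) -> GenericRequest:
    """Map an X-style drawing call onto the same generic form."""
    return GenericRequest(window, "draw_line", {"from": (x1, y1), "to": (x2, y2)})


def audit(request: GenericRequest, log: List[str]) -> GenericRequest:
    """A bus-level property applied uniformly, whatever protocol the call came from."""
    log.append(f"{request.operation} {request.resource}")
    return request


if __name__ == "__main__":
    log: List[str] = []
    audit(virtualize_file_read("/docs/report.txt", 0, 512), log)
    audit(virtualize_draw_line("window-1", 0, 0, 100, 50), log)
    print(log)  # ['read /docs/report.txt', 'draw_line window-1']
```

The property (`audit`) is written once against the generic form. That is the sense in which the text calls the software bus "programmable".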

An application may choose to be directly aware of this software bus or be completely unaware of it. If it is aware of the Client Utility software bus, it can exercise fine-grained control over the properties associated with the software bus. On the other hand, an unaware application can still use the facilities provided by the software bus; however, control and management of the properties must then be done through management APIs (interactive or programmable). The control of these properties allows an application to negotiate for the cheapest service, locate the nearest service, ensure that its data is safe, replicate the use of certain services to ensure reliability, etc. There is also a third possibility, depending upon the success of such utility infrastructures: the infrastructure may be subsumed into the local operating system, thus extending the nature and functionality of operating systems. This merger is a possible future step, but by no means necessary for the use and success of the utility.

The Client Utility architecture is a framework that can be used to describe, control, manage, and match the interaction between any resource provider and a client of the resource. The resource provider could be a virtual memory manager or a search engine; the principles used in the framework still apply. The client could be an OS's process management subsystem, a legacy NT application, a newly minted Java applet, or an ActiveX control; the intention, at least, is to provide appropriate mappings for most of the important categories of applications. This framework not only acts as a "software bus" for controlling resource interactions but also acts as a substrate that facilitates higher-level resource aggregations and management abstractions, such as information utilities and Web-site control for large distributed sites.

The implementation of the framework may be viewed as an Internet OS; it does for networked applications what standard OSs do for standalone applications. Just like standard OSs, it provides abstractions for building applications (files in standard OSs), a core set of services for all networked applications and services (comparable to memory management in standard OSs), and a set of powerful support software that aids in realizing a complete system (libc, login, and inetd in standard OSs). It is important to note that this Internet OS does not replace existing OSs such as NT, HP-UX, or VxWorks, but instead acts in conjunction with them to provide an inter-machine protocol that allows for the creation of scalable ubiquitous services and interactions.

The Client Utility is based on just five fundamental abstractions. The fact that so few abstractions are needed makes the system manageable; the fact that so much can be done with them gives us confidence that we have chosen wisely.

Resource: Fundamental abstraction that may encapsulate any functionality or service that needs to be directly accessed across protection, machine, or geographic boundaries. Everything is a resource, be it a file or a complex combination of services, which clients access by name. The Client Utility associates meta-data with each resource. Access permissions and attribute-based descriptions are built into the abstraction as opposed to being disparate mechanisms.

Attribute-based specification: Abstraction that is used for resource discovery and lookup. Clients can easily specify the attributes and constraints for the resource lookups in a large-scale distributed system through this abstraction. They are not required to agree on names in order to work on resources, making it easier to deal with heterogeneity in a dynamic environment.

Resource Proxy: Abstraction that provides a contact point for managing and interacting with one or more resources. Acts as the advertising and controlling agent for a set of resources or services. Understands, or delegates responsibility for understanding, resource semantics, thereby simplifying the Client Utility implementation.

Client Interface: Client-side abstraction that acts on behalf of the clients to provide a range of functionality such as error handling or dynamic compositions of resource accesses. This abstraction is geared towards simplifying the client program interactions in a highly componentized and distributed system.

Intermachine Agents: Entities that handle all inter-machine interactions. They implement the DRIP protocol, act as proxies for clients on the other machine, and look like local resource proxies to their machine, thereby hiding the distributed infrastructure from the Client Utility resource management implementation on a machine.
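The split between resource proxies and intermachine agents can be illustrated with a small Python sketch. The agent exposes the same `handle` method as a local proxy, so the per-machine `Core` routes a request the same way whether the resource is local or remote. All class names are hypothetical. The "remote" machine is simply another in-process `Core` object; a real agent would speak DRIP over the network rather than call its peer directly.

```python
from typing import Any, Dict, Protocol


class ResourceProxy(Protocol):
    """Contact point through which the core reaches one or more resources."""

    def handle(self, resource: str, operation: str, args: Dict[str, Any]) -> Any: ...


class LocalFileProxy:
    """Hypothetical proxy for files on this machine (kept in memory here)."""

    def __init__(self) -> None:
        self.files: Dict[str, str] = {}

    def handle(self, resource: str, operation: str, args: Dict[str, Any]) -> Any:
        if operation == "write":
            self.files[resource] = args["data"]
            return None
        if operation == "read":
            return self.files[resource]
        raise ValueError(f"unsupported operation {operation!r}")


class Core:
    """Per-machine core: routes each request to the proxy registered for it."""

    def __init__(self) -> None:
        self.routes: Dict[str, ResourceProxy] = {}

    def register(self, resource: str, proxy: ResourceProxy) -> None:
        self.routes[resource] = proxy

    def invoke(self, resource: str, operation: str, args: Dict[str, Any]) -> Any:
        return self.routes[resource].handle(resource, operation, args)


class IntermachineAgent:
    """Looks like a local proxy to its own machine but forwards to a peer.

    The peer is another in-process Core here; a real agent would carry the
    request over the network.
    """

    def __init__(self, peer: Core) -> None:
        self.peer = peer

    def handle(self, resource: str, operation: str, args: Dict[str, Any]) -> Any:
        return self.peer.invoke(resource, operation, args)


if __name__ == "__main__":
    machine_b = Core()
    machine_b.register("notes.txt", LocalFileProxy())
    machine_b.invoke("notes.txt", "write", {"data": "hello"})

    machine_a = Core()
    machine_a.register("notes.txt", IntermachineAgent(machine_b))
    print(machine_a.invoke("notes.txt", "read", {}))  # hello
```

Because `machine_a`'s core never distinguishes the agent from a local proxy, distribution is invisible to the resource management code on that machine, as the abstraction list describes.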

These abstractions dramatically simplify the model that implementors have to think about when deploying or building clients or services for a large-scale distributed system. Also, they subsume a large number of issues such as resource discovery, security matching, caching, and error handling. Furthermore, the traditional ties that clients have to services and resources have been made explicit so that it is easier to implement extensions.

This approach allows for various ways of componentizing applications and services. The kinds of functionality that had to be built into clients or servers, such as conversion between two incompatible interfaces, can now be provided as separate intermediary services implemented by independent organizations. This degree of componentizing significantly changes how we think of application construction and service providers in distributed systems. Together, these changes drastically lower the barrier to creating, deploying, and using networked services in a large distributed environment, which is the fundamental goal of a utility.

The core services subsume the kind of mapping, matching, and interposition needed to reconcile the differing requirements and specifications of clients and services. This part of the infrastructure is intended to be a white box that provides a small but powerful set of services and can be configured and fine-tuned through appropriate management APIs. The core services include matching, mapping, and binding of names, resource-specific data such as permission sets, and resource attributes. The services facilitate a number of useful features.

Personalizable name spaces: Every client or service in the utility has complete control over what its name space looks like. Thus, to a client on a UNIX machine, Word97 could look like

The infrastructure does not mandate a global namespace, although

one could be created using the abstractions of the infrastructure. In

systems that could possibly have millions or billions of machines,

this degree of virtualization of names is necessary.
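A minimal Python sketch makes the idea concrete. It assumes a name space is simply a per-task dictionary from that task's own names to Core repository handles. The `NameSpace` class and the handle value are hypothetical and not part of any Client Utility API. Repository handles are only meaningful inside one Core, so both tasks below are served by the same Core. Each task binds its own name to the same handle.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class NameSpace:
    """One task's private name space: its own names bound to Core repository handles."""
    bindings: Dict[str, int] = field(default_factory=dict)

    def bind(self, name: str, handle: int) -> None:
        self.bindings[name] = handle

    def resolve(self, name: str) -> Optional[int]:
        # None means this task has no name for the resource at all.
        return self.bindings.get(name)


# Hypothetical handle the local Core assigned to the Word97 resource.
WORD97 = 42

unix_task = NameSpace()
unix_task.bind("/usr/bin/winword", WORD97)

other_task = NameSpace()
other_task.bind("Word97", WORD97)
```

Both names resolve to the same resource, and neither task can see the other's name. No shared, global name is involved.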

Attribute-based resource lookups: In large scale distributed systems, unlike single-machine systems, specific resource names are often not an appropriate way to find resources. In a single-machine system, knowing the name /dev/fd0 is good enough to identify the exact resource. However, the list of resources that provide the same service in a large distributed system is volatile and may be very large. Hence, it is best to locate resources based upon descriptions of what the client needs. The Client Utility infrastructure achieves this end by supporting the resource abstraction mentioned above and incorporating attribute-based and constraint-based searches when a client binds a name to a resource.
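Here is a minimal sketch of attribute-based and constraint-based lookup. It assumes resource descriptions are flat attribute dictionaries and constraints are predicates over them. `ResourceDescription`, `lookup`, and the attribute names are illustrative inventions; the report does not specify a description format.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List, Mapping

Constraint = Callable[[Mapping[str, Any]], bool]


@dataclass
class ResourceDescription:
    handle: int                  # hypothetical Core repository handle
    attributes: Dict[str, Any]   # what the resource says about itself


def lookup(repository: Iterable[ResourceDescription],
           required: Mapping[str, Any],
           constraints: Iterable[Constraint] = ()) -> List[int]:
    """Return handles whose attributes equal every required value and satisfy every constraint."""
    constraints = list(constraints)   # may be re-checked for each description
    matches = []
    for desc in repository:
        attrs = desc.attributes
        if all(attrs.get(k) == v for k, v in required.items()) and all(c(attrs) for c in constraints):
            matches.append(desc.handle)
    return matches


repository = [
    ResourceDescription(1, {"type": "printer", "color": True, "ppm": 20}),
    ResourceDescription(2, {"type": "printer", "color": False, "ppm": 40}),
    ResourceDescription(3, {"type": "scanner", "color": True}),
]

# "Any printer that does at least 30 pages per minute": no resource name is needed.
fast_printers = lookup(repository, {"type": "printer"}, [lambda a: a.get("ppm", 0) >= 30])
```

The client states what it needs; which concrete resource satisfies it is decided at bind time.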

Seamless interposition: Every request made for a service, regardless of type, is intercepted by the Client Utility, enabling redirection and interposition. Thus, a service handler may create a proxy that is close to the client, an invocation stream may be forwarded to a transducer, or a client-specific workflow engine or error handler can be invoked. For example, a display interposer could take X protocol messages and display them on a handheld device that only understands a proprietary graphics device protocol. The model does not merely allow interposition: interposition can often be provided dynamically as a value-added service. An independent vendor could, for instance, dynamically add a commercial service that translates the X protocol to Java AWT.
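Interception lets a chain of interposers see every request before the resource proxy does. The sketch below shows this under simple assumptions: a request is a dictionary, and an interposer is a function that receives the request plus the next stage. `make_route`, `handheld_display`, `x_transducer`, and `monitor` are hypothetical. Upper-casing stands in for real protocol translation.

```python
from typing import Any, Callable, Dict, List

Request = Dict[str, Any]
Handler = Callable[[Request], Request]
Interposer = Callable[[Request, Handler], Request]


def make_route(proxy: Handler, interposers: List[Interposer]) -> Handler:
    """Wrap a resource proxy so each request passes through the interposers in list order."""
    handler = proxy
    for interposer in reversed(interposers):
        # Default arguments freeze the current interposer and downstream handler.
        def stage(request: Request, _ip: Interposer = interposer, _next: Handler = handler) -> Request:
            return _ip(request, _next)
        handler = stage
    return handler


seen: List[str] = []


def handheld_display(request: Request) -> Request:
    # Hypothetical resource proxy that only understands a proprietary "handheld" protocol.
    if request["protocol"] != "handheld":
        return {"status": "unsupported protocol"}
    return {"status": "drawn", "ops": request["ops"]}


def x_transducer(request: Request, next_stage: Handler) -> Request:
    # Hypothetical transducer; upper-casing stands in for real X-to-handheld translation.
    if request["protocol"] == "X":
        request = {"protocol": "handheld", "ops": [op.upper() for op in request["ops"]]}
    return next_stage(request)


def monitor(request: Request, next_stage: Handler) -> Request:
    # Sees every request before anything downstream does.
    seen.append(request["protocol"])
    return next_stage(request)


route = make_route(handheld_display, [monitor, x_transducer])
result = route({"protocol": "X", "ops": ["line", "text"]})
```

Neither the client nor `handheld_display` changes when the transducer is added; only the routing does.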

Secure interactions: The infrastructure ensures the security and safety of client interactions with resources and resource providers. Capability-based mechanisms, which have long been demonstrated to scale well, are used to secure access to and use of resources. The infrastructure does not itself handle the chore of authenticating individual clients and users, but instead allows for "plugging in" various services that provide the required authentication and certification. The core services only recognize capabilities; conversion of passwords or smart-card accesses to capabilities is provided through these authentication and certification services. These mechanisms allow the core to restrict a client's visibility of resources to only the set that it has capabilities for, or could get capabilities for indirectly. It is important to note that the infrastructure only provides the mechanisms for maintaining security; the actual policies are outside its domain. Configuring the permissions for resources in the right way makes it easy to support:

- roles (sometimes a user is a manager, other times an engineer);
- compartments (when you are working on project A, you can't see anything related to project B);
- military-style security like that in the "Department of Defense Trusted Computer System Evaluation Criteria", commonly referred to as the Orange Book (someone with Secret clearance cannot read a Top Secret document);
- and so on.
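A small Python sketch illustrates the separation of mechanism from policy. Purely for illustration, it assumes each resource carries a set of locks, each task holds a set of keys, and a key opens the lock with the same name. `Resource` and `visible` are hypothetical. Roles and compartments then come from how keys are handed out, not from the visibility check itself.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Resource:
    name: str
    locks: FrozenSet[str]   # holding the key for any one of these locks grants visibility


def visible(resources: List[Resource], keys: Set[str]) -> List[str]:
    """Mechanism only: show a task just the resources its keys open.

    Policy (roles, compartments, clearances) lives entirely in how locks and keys are assigned.
    """
    return [r.name for r in resources if r.locks & keys]


repository = [
    Resource("specA.doc", frozenset({"projectA"})),
    Resource("specB.doc", frozenset({"projectB"})),
    Resource("salaries.xls", frozenset({"manager"})),
]

engineer_on_a = {"projectA"}          # compartment: nothing from project B is visible
as_manager = {"manager", "projectA"}  # same user in a different role, with a different key set
```

Switching roles means presenting a different key set. The `visible` function never changes.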

Introspection: Since every service request is intercepted by the Client Utility, the interaction between clients and services can be examined closely and manipulated by higher level APIs. A system administrator of a large utility may decide to dynamically change a file mirroring site, modify security policies, and so on. All these are enabled by allowing trusted entities (programs and end users) to use the Client Utility APIs that deal with resource metadata, such as names, permissions, and the like. We expect higher level management APIs to be the main customers of these APIs. These are the APIs used to construct management tools or aggregation abstractions such as the virtual personal computer.

The entities that the core services deal with are components of all application-specific (or domain-specific) interactions. The core services handle these entities in a generic manner. Consequently, higher level management abstractions can reason about and manipulate client-service interactions uniformly through the use of introspection APIs. Also, the conversion to a generic interaction protocol relieves clients and services of dealing with naming mismatches, interface variations, and the like, which invariably occur in the interactions between independent components. In this sense, the OS not only acts as a substrate for building higher-level paradigms, such as utilities and virtual personal computers, but also simplifies the construction, deployment, and management of networked clients and services.

Support software in the Client Utility architecture falls into three categories. Emulation software allows existing legacy environments to benefit from the functionality provided by the Client Utility middleware implementation. For example, emulation may enable Microsoft Word '97 to seamlessly access remote UNIX files. Similarly, NTFS files may be seamlessly exported to the utility and made accessible to client applications on non-NT machines.

General services form a small set of meta-services, such as metadata repositories, directory, and authentication services, which are necessary to build complete systems. They allow Client Utility systems to bootstrap and to use existing industry-wide standard functionality as far as possible. Domain specific services include all the services and functionality needed to implement specific forms of utilities. Hence, a utility supporting e-commerce could include contract maintenance services.

The Client Utility would be useful but quite limited if it didn't include a means to deal with other machines. In fact, the main reason to implement the Client Utility is the secure, transparent sharing of resources that it provides. A utility can be as small as one machine, but it has been designed to scale to the size of the Internet. A very large scale system can only perform well if it doesn't rely on any centralized services. Hence, the Client Utility is designed so that all connections are pairwise, all scheduling decisions are made dynamically at resource request time, and no connection hierarchy is imposed, although one can be constructed if desired.

It is unlikely that a very large utility will be made up of machines of the same type. Indeed, we expect a utility to be made up not only of computers of incompatible architectures, but also of devices of various capabilities. Today, these devices are likely to be printers and scanners; tomorrow they are likely to be cellular phones or even light switches. In order to deal with this diversity, the Client Utility only requires that communication between machines obey certain conventions. No machine ever attempts to look inside another.

If a light switch makes a request of a clock, there is no reason for the clock to know what operating system is running on the light switch, what user is making the request, or what accounting structure the light switch uses to manage its billing policy. All the clock needs to know is that the light switch wants to know what time it is and whether the clock has agreed to give the light switch the time of day.

An important implication of this decision is that a machine can choose how much of the Client Utility infrastructure it runs. If all it wants to do is use resources from other machines, it only needs sufficient code to send the proper requests and handle the replies. If it wants to let others use some of its resources without using the Client Utility security infrastructure, it can respond to requests as specified in the protocol. This approach also means that machines can be using different versions of the Client Utility protocol; all they need is a common dialect to enable them to share resources.

Since machines can connect to each other in a completely unstructured way, what constitutes a utility? We believe a utility is defined by the set of machines that share an administrative domain. Since, as we'll see, each machine decides exactly what part of its resources it will let other machines use, it can choose to give administrative control over certain resources to another machine. A set of machines sharing a common administrator constitutes a utility. This administrative domain is completely independent of the way machines connect to each other. Machines within a utility may choose to share more resources with other machines in the same utility than with those in other utilities, but this decision is one of policy, not architecture.

The protocol used by Client Utility machines has several stages: connection, authentication, exchange of resource descriptions, use of remote resources, and failure limitation and recovery. Each of these components was designed to be consistent with the scalability, heterogeneity, and security requirements of a large, distributed system.

The first step in participation in the Client Utility is to find one or more machines to connect to. The list of machines could be provided by a system administrator, a list of participants could be kept by some directory service such as Yahoo, or the machine could broadcast a request. However it is obtained, the machine now has a list of other machines to talk to. Since all connections are pairwise, we need only describe a single connection.

The information needed to establish the connection is contained in a Connection object that was prepared by the machine being contacted. It specifies everything needed to communicate with this machine. This includes the communications mode (HTTP, TCP, UDP, ...) and contact port (if appropriate), as well as properties of the machine itself, such as its endianness.
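A Connection object might be modeled as below. This is a sketch under stated assumptions: the field names, the `host` field (which the report does not list), and the `pack_u32` helper are hypothetical. The helper shows why recording endianness matters: the same integer is encoded differently for big-endian and little-endian machines. It uses Python's standard `struct` module, where `>` and `<` select big-endian and little-endian byte order.

```python
import struct
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Connection:
    """What the contacted machine publishes so others can reach it (field names are hypothetical)."""
    mode: str             # communications mode, e.g. "HTTP", "TCP", "UDP"
    host: str             # assumed address field; not listed in the report
    port: Optional[int]   # contact port, or None when the mode has none
    byte_order: str       # the machine's endianness: "big" or "little"

    def pack_u32(self, value: int) -> bytes:
        """Encode an unsigned 32-bit integer in this machine's byte order."""
        fmt = ">I" if self.byte_order == "big" else "<I"
        return struct.pack(fmt, value)


peer = Connection(mode="TCP", host="printer.example.com", port=9000, byte_order="big")
encoded = peer.pack_u32(1)
```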

A machine running the full Client Utility infrastructure will start a new task running in a very restricted protection domain, a sandbox that makes available only the resources the task needs. This task is only allowed to talk to the other machine over the agreed upon channel. All communication from a specific machine is mediated by its proxy. These proxies will negotiate a dialect of the Client Utility protocol that both machines understand. In this way machines running different versions of the protocol can still communicate. If they don't have a dialect in common, they will terminate the connection.
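The report does not say how a dialect is chosen. One plausible rule, assumed here for illustration, is that each proxy advertises the dialect versions it supports as integers and both pick the newest one they share. `negotiate_dialect` is a hypothetical helper.

```python
from typing import Optional, Set


def negotiate_dialect(local: Set[int], remote: Set[int]) -> Optional[int]:
    """Return the newest dialect both proxies speak, or None, meaning: terminate the connection."""
    common = local & remote
    return max(common) if common else None


old_machine = {1, 2}
new_machine = {2, 3}
chosen = negotiate_dialect(old_machine, new_machine)   # both fall back to dialect 2
```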

The first thing the machines will do once they have determined a common dialect is to authenticate each other. The context determines what constitutes authentication. Two machines within an enterprise can prove to each other that they do indeed belong to the same company. Once that is done, they can share resources they wouldn't want seen by outsiders. If they are communicating over an insecure line, they can also establish a session key so that all messages can be encrypted.
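In the enterprise case, two machines could prove membership in the same company, and agree on a session key, with a challenge-response exchange over a shared secret. The sketch below uses HMAC-SHA256 from Python's standard `hmac` and `hashlib` modules. The shared secret, the role tags, and the key derivation are assumptions made for illustration. This is not the Client Utility's actual authentication protocol, and it is a sketch rather than a vetted design.

```python
import hashlib
import hmac
import secrets


def prove(secret: bytes, role: bytes, challenge: bytes) -> bytes:
    """Answer a challenge with an HMAC; the role tag stops an answer being reflected back."""
    return hmac.new(secret, role + b"|" + challenge, hashlib.sha256).digest()


def check(secret: bytes, role: bytes, challenge: bytes, answer: bytes) -> bool:
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(prove(secret, role, challenge), answer)


def session_key(secret: bytes, nonce_a: bytes, nonce_b: bytes) -> bytes:
    """Both sides derive the same per-connection key from the secret and both fresh nonces."""
    return hmac.new(secret, b"session|" + nonce_a + nonce_b, hashlib.sha256).digest()


company_secret = secrets.token_bytes(32)   # hypothetical secret shared across the enterprise
nonce_a = secrets.token_bytes(16)          # challenge sent by machine A
nonce_b = secrets.token_bytes(16)          # challenge sent by machine B

answer_b = prove(company_secret, b"B", nonce_a)   # B answers A's challenge
answer_a = prove(company_secret, b"A", nonce_b)   # A answers B's challenge
mutually_authenticated = (check(company_secret, b"B", nonce_a, answer_b)
                          and check(company_secret, b"A", nonce_b, answer_a))
key = session_key(company_secret, nonce_a, nonce_b)
```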

In a commercial setting, authentication may not require identifying the machine or the owner of the machine. Instead, it may make more sense to establish an ad hoc contract. For example, a customer may supply a credit card number or prove possession of a digital wallet acceptable to the vendor. In these cases, the service provider is not authenticating the buyer, only the party guaranteeing payment, a scheme that greatly simplifies the model. It is also reasonable to defer authentication, allowing the other party to see only publicly available resources such as advertising.

2.2. Abstractions

2.3. Core Services

/usr/bin/winword,

whereas the service actually exporting Microsoft Word from another

machine in the utility could actually have it stored as

c:\winnt\system32\WinWord.exe.

2.4. Support Software

2.5. Intermachine Protocol

2.5.1. Connection

2.5.2. Authentication

2.5.3. Exchange of Resource Descriptions

Once the machines know who they are talking to, they can decide what resources they are willing to make available to users on the other machine. This list constitutes a policy determined by the administrator of each machine. Few resources will be exposed to machines that haven't established a high degree of trust; more resources will be shared with those that have.

The proxy on the machine that owns the resources will ask the Core to add name associations for the resources to be exported to its protection domain. It will then send a description of each resource to the other machine. When it receives a description of a remote resource, the receiving proxy will validate whatever part of the resource description it understands. Next, the receiving proxy will ask its Core to register these resources, naming itself as the resource proxy.

When the exchange is complete, the sending proxy will have a protection domain consisting of the minimum set of resources it needs to do its job, all the resources imported from the other machine, and all the resources exported to the other machine. The Core will have added entries for all the resources its proxy imported. Any use of these resources will be forwarded to the proxy as the designated resource proxy.
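Both sides of the exchange are sketched below. The exporting side applies its administrator's policy. The importing side validates each description and registers it with its own Core, naming itself as resource proxy. `ToyCore`, `export_descriptions`, `import_descriptions`, and the numeric `min_trust` field are hypothetical stand-ins; the report does not define a description format or trust scale.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyCore:
    """Hypothetical Core: maps local repository handles to a description and its resource proxy."""
    repository: Dict[int, dict] = field(default_factory=dict)
    next_handle: int = 1

    def register(self, description: dict, proxy: str) -> int:
        handle = self.next_handle
        self.next_handle += 1
        self.repository[handle] = {"description": description, "proxy": proxy}
        return handle


def export_descriptions(resources: List[dict], peer_trust: int) -> List[dict]:
    """Exporting proxy: the administrator's policy decides what a peer may see."""
    return [r for r in resources if r["min_trust"] <= peer_trust]


def import_descriptions(core: ToyCore, proxy_name: str, descriptions: List[dict]) -> List[int]:
    """Importing proxy: validate what it understands, then register itself as resource proxy."""
    handles = []
    for d in descriptions:
        if not isinstance(d.get("name"), str):
            continue  # cannot validate this description, so do not import it
        handles.append(core.register(d, proxy=proxy_name))
    return handles


machine_a_resources = [
    {"name": "laser-printer", "min_trust": 0},
    {"name": "payroll-db", "min_trust": 5},
]
sent = export_descriptions(machine_a_resources, peer_trust=1)   # only the printer is shared
core_b = ToyCore()
imported = import_descriptions(core_b, "proxy-for-A", sent)
```

Any later request that machine B's Core resolves to the imported handle goes to `proxy-for-A`. To that Core, the remote printer looks like any other resource with a local resource proxy.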

Tasks add resources to their protection domains by telling the Core the attributes of what they want. There is no need for the applications to know the source of these resources. They may be local, or they may be remote. If they are remote, any request to use one will be forwarded to the proxy for the machine that owns the resource. Even the Core is not aware that the request is for a remote resource. As far as the Core is concerned, the proxy is just another resource proxy. When a proxy executes a command on behalf of a task on the other machine, the Core sees a local request. This time the proxy looks like any other local client.

The proxy for another machine can be intelligent and partially process the request, or it can merely forward it across to its counterpart on the other machine. That proxy can also do some processing of the request, or it can attempt to execute the request. When it does, it talks to the thread that is acting as its client proxy for the Core. The request gets marshaled into the proper format and forwarded to the Core. At this point, the Core sees a normal request from a local task. It can do all the checking it normally does without knowing anything about another machine.

The proxies not only isolate the Cores from each other, but they also provide the opportunity to do some filtering. For example, it may be corporate policy to do certain functions only on machines within the enterprise. Since the proxy sees the payload, it can redirect the request to a proxy connected to an internal machine. In this way it acts like a firewall.

Some requests may involve resources from a variety of places. For example, if a task runs a word processor on another machine with a file from the first machine, we need some way for the word processor task to have permission to read and write the file. Clearly, the resource description was exported to the machine, but the task running the word processor needs to have a name for the file. We handle this situation by having the proxy transfer parts of the protection domain to the proxy on the other machine.

When a task wants to start a job on another machine, it transfers resources to the proxy for the other machine. (Of course, we use our security mechanisms to make sure that the proxy doesn't get access to resources that shouldn't be available to users on the other machine.) The proxy forwards the request across the wire along with the resources the requester transferred as part of the request. The receiving proxy registers these resources with its Core and adds them to its protection domain. Finally, it executes the command. If the program needs resources from both machines, the two proxies must impersonate the requesting task.

A single proxy can represent many tasks by getting a number of distinct protection domains from the Core. Each one will have the set of resources common to both the requester and the machine running the command. The Core need not be aware of this schizophrenia of the proxy or any other task, for that matter.

Components will fail. These may be hardware failures, such as network links and disk drives, but software failures, such as operating system kernel panics and memory leaks, are also common. It is important to discover such failures and limit their effects. Well-behaved components are expected to report failures they detect to a designated agent. This agent will make inferences about the cause of the failure using information collected from many sources. If this agent can determine the cause of the failures, it can direct components to take action to limit the spread of the failure. Well-behaved components are expected to accept such instructions from their designated monitor.

As an example, consider a machine that provides a copy of a particular file that is used by a number of applications. If this machine fails, applications asking for the file will experience a failure. They may have an alternate source for the file, so they need not elevate the problem to the user. However, the extra overhead involved in identifying the failure may be substantial. For example, they may have to wait for a time-out to expire before going to the alternate source. If these applications report the failure, the monitor receiving the information can infer the source of the problem and tell other applications to go directly to the alternate source. Further, the monitor can tell the non-responsive machine to take corrective action such as rebooting.
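The inference step might be sketched with a deliberately simple rule. Once enough distinct components report that the same source is unreachable, the monitor declares it failed. It then tells everyone to use the alternate and asks the failed machine to take corrective action. `FailureMonitor`, the threshold, and the instruction strings are all hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set


class FailureMonitor:
    """Hypothetical designated agent that infers a failed source from many components' reports."""

    def __init__(self, threshold: int = 2) -> None:
        self.threshold = threshold
        self.reporters: DefaultDict[str, Set[str]] = defaultdict(set)
        self.failed: Set[str] = set()

    def report(self, reporter: str, source: str) -> List[str]:
        """Record that `reporter` could not use `source`; return any instructions issued."""
        self.reporters[source].add(reporter)   # repeated reports from one component count once
        if source not in self.failed and len(self.reporters[source]) >= self.threshold:
            self.failed.add(source)
            return [f"all: use alternate for {source}", f"{source}: take corrective action"]
        return []

    def choose(self, primary: str, alternate: str) -> str:
        """Let components skip the time-out on a source already known to be down."""
        return alternate if primary in self.failed else primary


monitor = FailureMonitor(threshold=2)
first = monitor.report("editor", "fileserver")
repeat = monitor.report("editor", "fileserver")
second = monitor.report("compiler", "fileserver")
```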

The goal of the failure limitation architecture is to use information from a number of sources to limit the effect of a failure in the system. In many cases, a failure that would otherwise be elevated to the user can be repaired without adversely affecting running components. At the very least, the number of components affected by the failure can be limited.

Architectural principles that apply within a machine

The Client Utility consists of a small set of services that communicate with user tasks and resource proxies via messages. These services are denoted by the term Core, a term chosen to avoid confusion with the term kernel often used to describe the trusted part of a conventional operating system.

The only services provided by the Client Utility Core are name resolution, extraction of resource specific data, message routing, and a means to monitor and manage the system. All other services are provided by resource proxies written to understand the management of specific kinds of resources, such as files, memory segments, processes, etc. These resource proxies can be separate user-space processes, but trusted services can run in the same address space as the Client Utility Core.

In order to avoid confusion with terms such as System, Machine, or Processor to designate the domain of control, we will use the designation Core. Resources within a Core have unique designations; each Core has a Repository listing all resources that it knows about; each Core has the 4 basic components.

Although it is most commonly the case that there is a single Client Utility Core running on a single machine, the architecture is more general. We can have one Core running across several machines, whether they form a multiprocessor or are network connected. We can also have more than one Core running on a single CPU. This latter configuration is useful when we need to repair a machine yet present the view to the users that it is running.

This overview provides an introduction to the Client Utility architecture. Many of the special cases have been ignored in order to keep the discussion straightforward. In particular, we will describe only a single Core running on a single machine with only one CPU.

Fundamental to the Client Utility is the fact that virtually everything in the system is treated as a named resource. These resources include such familiar things as files, processes, and memory segments, but also may include resources that are not usually named. For example, it is common to name a data base, but the Client Utility also allows naming of data base records. The Client Utility also names resources that don't exist in other systems, such as name spaces and key rings. The Client Utility Core maintains a Repository which lists all resources known to this Core. Each resource has a name unique to this Core, a Core Repository Handle (CRH). A Core repository handle is never used outside the Core.

The Core can only deal with resources having entries in its repository. However, the Core, as a policy decision, may delete repository entries any time it wishes. Hence, a small machine can continue running even if it is too small to hold all resource descriptions exported to it. The Client Utility makes provisions for external agents to provide resource descriptions that the Core has chosen not to keep. Hence, an application referring to a resource description that the Core has deleted can still continue running, although it may suffer a performance penalty.
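A bounded repository that drops entries and recovers them from an external agent could look like the sketch below. It assumes a least-recently-used eviction policy; the report leaves the policy open. The external agent is addressed by a hypothetical resource identifier rather than by a repository handle, since handles never leave the Core. `Repository` and `refetches` are illustrative names.

```python
from collections import OrderedDict
from typing import Callable


class Repository:
    """Bounded repository: may forget descriptions and later refetch them from an external agent."""

    def __init__(self, capacity: int, external_agent: Callable[[str], dict]) -> None:
        self.capacity = capacity
        self.external_agent = external_agent
        self.entries = OrderedDict()   # resource id -> description, least recently used first
        self.refetches = 0             # how often the slow path was taken

    def put(self, resource_id: str, description: dict) -> None:
        self.entries[resource_id] = description
        self.entries.move_to_end(resource_id)
        while len(self.entries) > self.capacity:
            self.entries.popitem(last=False)   # policy choice: evict least recently used

    def get(self, resource_id: str) -> dict:
        if resource_id in self.entries:
            self.entries.move_to_end(resource_id)
            return self.entries[resource_id]
        self.refetches += 1                    # the performance penalty the text mentions
        description = self.external_agent(resource_id)
        self.put(resource_id, description)
        return description


# Hypothetical external agent holding every description exported to this machine.
agent_store = {"fileA": {"proxy": "files"}, "fileB": {"proxy": "files"}, "printer": {"proxy": "lp"}}
repo = Repository(capacity=2, external_agent=agent_store.__getitem__)
for rid in ("fileA", "fileB", "printer"):
    repo.put(rid, agent_store[rid])   # "fileA" is evicted once capacity is exceeded
```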

Each request from a task for a system resource is made via a message from the task to the Core. A message consists of an outbox envelope, which is read by the Core, and a payload, which is read by a resource proxy, a task which will process the request. The router component of the Core constructs an inbox envelope and forwards the rewritten message to the resource proxy which is responsible for interpreting the message payload. A task can grant access to a resource to the recipient by including it in the message envelope. Unless there is strong authorization on the resource, a corresponding entry will be put into the inbox envelope. In this way, the sender can give the receiver a place to send replies; it includes a resource naming the sender as resource proxy. The same mechanism allows the sender to pass parameters to the receiver even though they don't share names for the resources.

Since the payload is defined by a convention established between a task and a resource proxy, we say that each resource speaks a specific language. (A language in this context is simply an API plus Client Utility specific information.) Thus, a file resource proxy speaks the file language; a scheduler speaks the scheduler language; etc. There is also a Client Utility Core language used to manipulate Core data structures. The Core never looks into the message payload unless the message is in the Core language. The Core knows nothing about languages other than the fact that they are unique within a Core. Any task can register a resource that represents a language and give itself ownership rights. The Core will make sure that this resource can be uniquely identified.

The Client Utility separates the concepts of naming and permissions. Each task works within a name space that it is free to define. Each name in this name space is bound to zero or more Core repository handles and an optional attribute description. Permissions are defined by the set of keys held by the task. Thus, access to a resource is controlled both by associating a task specific name to a Core repository handle and by controlling which keys are transferred to the task.

Three kinds of errors can occur when a task attempts to manipulate a resource. If no name association exists in the task's name space for the specified name, the task receives a "Does not exist" error. If the name exists but cannot be bound to a repository handle, the task receives a "No resource with that name" error. If the name association exists, but the task's permissions indicate that it shouldn't see the resource, either a "Does not exist" or an "Access denied" error is returned, depending on the reason for the denial. Of course, the proxy responsible for this resource can return a variety of errors as well.
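The three error cases can be told apart as in this sketch. It assumes dictionaries for the name space and repository and lock sets for permissions. A hypothetical `hidden` flag stands in for "the reason for the denial", which the report does not spell out. `CoreError` and `check_access` are invented names.

```python
from enum import Enum
from typing import Dict, Optional, Set


class CoreError(Enum):
    DOES_NOT_EXIST = "Does not exist"
    NO_RESOURCE = "No resource with that name"
    ACCESS_DENIED = "Access denied"


def check_access(name_space: Dict[str, Optional[int]], repository: Dict[int, dict],
                 keys: Set[str], name: str) -> Optional[CoreError]:
    """Return None if the request may proceed, else the error the task would see."""
    if name not in name_space:
        return CoreError.DOES_NOT_EXIST
    handle = name_space[name]
    if handle is None or handle not in repository:
        return CoreError.NO_RESOURCE
    entry = repository[handle]
    if not (entry["locks"] & keys):
        # Hypothetical "hidden" flag: conceal the resource rather than admit it exists.
        return CoreError.DOES_NOT_EXIST if entry.get("hidden") else CoreError.ACCESS_DENIED
    return None


name_space = {"report": 1, "board-minutes": 2, "stale-link": 99, "plans": 3}
repository = {
    1: {"locks": {"staff"}},
    2: {"locks": {"board"}, "hidden": True},
    3: {"locks": {"board"}},
}
staff_keys = {"staff"}
```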

The messaging is straightforward. Consider an application that needs a service from a resource proxy, say to open a file. It sends a message to the Core. The Router examines the message envelope to find the named resources. For each, it asks the Name Manager to find the repository handle associated with the name in the application's designated name space. Next, the Core repository handler looks up the resource metadata in the repository, including permissions that correspond to keys on the application's designated key rings. If all has gone well, the Router constructs an inbox envelope, which it forwards to the proxy for this resource along with the payload of the original message. Meanwhile, the Monitor has been logging what's been going on.
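The flow through the four Core components can be traced in a compact Python sketch. It assumes dictionaries for name spaces and the repository and a plain function for each resource proxy. Permission checking is left out to keep the trace short. `RouterCore`, its method names, and the metadata fields are hypothetical. The router reads only the envelope and passes the payload through untouched.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Proxy = Callable[[dict, bytes], bytes]


@dataclass
class RouterCore:
    name_spaces: Dict[str, Dict[str, int]]         # task id -> (task's name -> repository handle)
    repository: Dict[int, dict]                    # handle -> metadata, including the resource proxy
    proxies: Dict[str, Proxy]                      # resource proxy id -> handler
    log: List[str] = field(default_factory=list)   # the Monitor's record

    def name_manager(self, task: str, name: str) -> int:
        return self.name_spaces[task][name]

    def repository_handler(self, handle: int) -> dict:
        return self.repository[handle]

    def route(self, task: str, outbox: dict, payload: bytes) -> bytes:
        """Router: resolve the envelope, build an inbox envelope, forward the untouched payload."""
        handle = self.name_manager(task, outbox["resource"])
        meta = self.repository_handler(handle)
        inbox = {"resource_data": meta["data"], "label": outbox.get("label")}
        self.log.append(f"{task} -> {meta['proxy']}")
        return self.proxies[meta["proxy"]](inbox, payload)


def file_proxy(inbox: dict, payload: bytes) -> bytes:
    # Hypothetical file-system proxy: the real path comes from resource specific data.
    return payload + b" " + inbox["resource_data"]["path"].encode()


core = RouterCore(
    name_spaces={"task1": {"my.addresses": 5}},
    repository={5: {"proxy": "files", "data": {"path": "/u/joey/addresses"}}},
    proxies={"files": file_proxy},
)
reply = core.route("task1", {"resource": "my.addresses", "label": "address.book"}, b"open read")
```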

3.2. Walk Through

A first look at the architecture

3.2.1. Introduction to Walk Through

A simple walk through of the features of the Client Utility architecture will make it easier to understand the details. We'll use the example of opening a file since it's familiar to most readers. However, any access of any resource of any type will be handled in the exact same way.

For pedagogical reasons, we're going to hide details at each step along the way. We'll introduce them as the proper context is established. Two facts are needed to get started.

In addition, every entry in the Core Repository has a handle which we call its Core Repository Handle (CRH). Note that repository handles are unique within a single Core. No coordination of repository handles is needed between Cores, whether on different machines or on the same machine. A Core never accepts a repository handle from the outside.

The Client Utility uses a mailbox metaphor to describe the way applications make requests to access resources. The actual implementation may use mailboxes or not. In one prototype implementation, the messages are actually constructed by a thread running in the Core based on data passed from the application.

A task makes a request to the Core by putting a message in the task's outbox. This message consists of an envelope, the outbox envelope, and a payload. The outbox envelope is defined by the Client Utility protocol; the payload is a convention between the requester and supplier of the service. Because of this convention, we say that the message is in a specific language. The Core will never look at the payload of the message unless it is in one of the Core languages, the languages used to manipulate resources owned by the Core, such as mailboxes.

Any task can run on a Client Utility machine as long as it can do its job with the default resources. Hence, a typical Java applet will work without interacting with the Core. However, any task that needs more resources can get them only from the Core.

Most operating systems have facilities that allow the Core to start all tasks. In this situation, the Core will establish a default protection domain for every task. For example, users will log on using a logon task that the Core has started with this default protection domain. Communications with the Core are via anonymous pipes that the Core created when it started the task. The Core uses the pipe it reads from as the task's unforgeable identity.

A two step process is needed on operating systems on which the Core cannot start all tasks. The first thing the task needs to do is contact the Core by connecting to the Core's portmapper. The Core will respond by returning to the task a port to use to contact the thread acting as the task's client proxy. (Note that these ports need not be socket ports; they are merely a means to exchange messages.) The Core will also return a token for the task to present on each request. This token is an unguessable 64-bit number used as the unforgeable identity of the task for the task's lifetime. The Core will associate a protection domain with this token.

There appears to be a security hole here because there is a time interval between when the port is assigned and when the task first uses it. A malicious task could come along and steal the port at this time. However, nothing is gained in this attack because any task will be given the initial resources, malicious or not. Once the connection is established, the token is used to authenticate any future requests.
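The token scheme for systems where the Core cannot start every task might look like this sketch. It uses Python's `secrets` module to draw a 64-bit random value. `PortMapper`, its methods, and the resource name are hypothetical. Every caller receives the same default domain, which is why stealing the port during the window described above gains an attacker nothing.

```python
import secrets
from typing import Dict, List


class PortMapper:
    """Hypothetical Core portmapper that issues unguessable 64-bit task tokens."""

    def __init__(self) -> None:
        self.domains: Dict[int, List[str]] = {}   # token -> resources in the protection domain

    def connect(self) -> int:
        token = secrets.randbits(64)
        while token in self.domains:               # astronomically unlikely, but cheap to rule out
            token = secrets.randbits(64)
        # Every newcomer, malicious or not, gets the same minimal default domain.
        self.domains[token] = ["core-requests"]
        return token

    def protection_domain(self, token: int) -> List[str]:
        # The token is the task's identity: an unknown token gets nothing.
        if token not in self.domains:
            raise PermissionError("unknown task token")
        return self.domains[token]


mapper = PortMapper()
token = mapper.connect()
```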

The differences between these two means of connecting to the Core are hidden in the library routines provided on different operating systems. The client always uses the API to connect to the Core, and the library routine uses the proper messaging scheme. In this way, a single application can run on Cores that implement different messaging layers.

The initial protection domain built by the Core will include exactly enough resources to allow the task to request more resources from the Core. The task will then begin by adding resources to its protection domain, either by making general requests or by authenticating itself and getting resources belonging to its account.

Let's look at a typical request, say to open a file, made by a task that has been running for a while. I call this file my.addresses, but I may not want anyone else to know the name. After all, my name for it might be the_boss_is_dumb. Hence, I want the file system to see an alias, say the_boss_is_smart.

Back to reality. The task will construct a payload in a language known to the file system, say "open read address.book", and an outbox envelope. One of the things the task puts in the envelope is a name field for the resource. A name field contains the task's name for the resource, my.addresses, and additional information described later.

When the message is delivered to the Core, it looks in the envelope to extract the task's identifier token and to see the name of the resource being accessed, my.addresses in this case. The task's identity, determined either by its communications channel or its token, is associated with a protection domain which, in turn, specifies a name space. The Core looks in this name space to find the repository handle associated with the task's name my.addresses. If no name association is found, an error is returned to the task stating that the resource doesn't exist.

If a name association is found, the Core looks in the Repository for the state information associated with this resource. One such piece of information is the resource proxy for the resource. This proxy is designated by a mailbox attached to a task that understands the language of the payload.

Another field in every repository entry contains resource specific data. This field has two parts, one private to the resource proxy and the other available to all tasks. The private data contains any data the resource proxy may need to deal with requests. It usually includes a set of permission fields, each made up of a lock and an associated permission. Each request contains a list of key rings, each of which holds a number of keys. The Core will match up the keys on these key rings with the locks in the permission fields. Any permission associated with a lock that gets opened will be forwarded to the resource proxy. Note that the Core never interprets the resource specific data; it just passes it on to the resource proxy. In our example, the Core might extract the strings read and write.
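The lock-and-key matching might be sketched as follows. For simplicity it assumes a key opens a lock when the two carry the same identifier; a real system would need unforgeable keys. The names `extract_permissions`, `owner-key`, and `guest-key` are hypothetical. The Core only tests whether locks open. The permission strings it returns are opaque to it.

```python
from typing import Iterable, List, Set, Tuple

PermissionField = Tuple[str, str]   # (lock, permission string)


def extract_permissions(permission_fields: List[PermissionField],
                        key_rings: Iterable[Set[str]]) -> Set[str]:
    """Collect the permissions whose locks are opened by any key on any of the request's key rings.

    The permission strings are not interpreted here; the resource proxy decides what they mean.
    """
    keys: Set[str] = set()
    for ring in key_rings:
        keys |= ring
    return {permission for lock, permission in permission_fields if lock in keys}


# Hypothetical permission fields for the file in the walk-through.
fields = [("owner-key", "read"), ("owner-key", "write"), ("guest-key", "read")]
owner_perms = extract_permissions(fields, [{"owner-key"}, {"unrelated-key"}])
guest_perms = extract_permissions(fields, [{"guest-key"}])
```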

The public part of the resource specific data field contains any data that the task registering the resource feels tasks might need to know. For example, the public field might contain a digital certificate identifying the supplier of the resource. It might also contain a piece of code, like a CORBA stub, that the task can use to invoke an operation on the resource.

The Core will now construct a message to be delivered to the resource proxy. This message consists of the unmodified payload and an inbox envelope. The Core will put a name for this resource in the inbox envelope. In addition, if the outbox envelope specified a label for the resource, say address.book, the Core will insert that value into the label field of the inbox envelope. This label can be used by the task and resource proxy to mutually identify the resource.

The inbox envelope will tell the resource proxy the resource specific data and the name for each of these resources. The association between these names and the fields in the payload is part of the language specification agreed on by the requester and resource proxy. The semantic content of the payload and the resource specific data is the business of the resource proxy, not the Core.

The resource proxy can now do its job, except for one thing. How does it know what file in the underlying system the requesting task is talking about? Fortunately, there is no problem if the repository entry is configured properly. The resource simply gets registered with this information encoded in the field containing resource specific data. In our example, this name could be /u/joey/addresses.

The resource proxy now knows that the request is to open file /u/joey/addresses and that the requester has the permissions associated with the strings read and write. It can now access the file system to open the file, but what does it do with the file handle? How does it get the handle back to the requester? It could ask the Core, but the Core doesn't keep any information about message-reply status. Instead, we use a different scheme.

The outbox envelope constructed by the requester can also include a second name field, holding a name association to be transferred to the message recipient. Except that it is not used to find a resource proxy for the message, this name field is identical to the primary one. It has the requester's name for the resource and an optional label.

In our example, the requester would specify a resource for which the requester is the resource proxy. By convention, the resource proxy knows that the second name field in its inbox envelope names the resource to use for sending a reply. The resource proxy can now put the file handle into the payload of a message whose outbox envelope specifies the name associated with the reply resource as the primary resource. The Core will then use the same procedure as for the original message to deliver the reply.
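The reply convention can be sketched from the resource proxy's side. This is a minimal sketch assuming a simplified envelope with only a primary name and a reply name; `handle_open` and the injected `open_file` function are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Envelope:
    primary: str                    # resource the message is about
    reply_to: Optional[str] = None  # second name field: resource for the reply


def handle_open(inbox: Envelope, resource_data: dict,
                open_file: Callable[[str], int]) -> Tuple[Envelope, dict]:
    if inbox.reply_to is None:
        raise ValueError("request carries no reply resource")
    # The file name comes from the registered resource specific data,
    # not from the requester's payload.
    handle = open_file(resource_data["path"])
    # The reply names the reply resource as its primary resource, so the
    # Core routes it back exactly as it routed the original request.
    return Envelope(primary=inbox.reply_to), {"handle": handle}
```

Because the reply is just another message to a named resource, the Core needs no record of which requests are still waiting for replies.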

Since the Core decides what name goes into the recipient's name space, it cannot be sure it won't choose a name already in use there unless it searches the entire name space, which is too time consuming to do on every message. There is another problem. What if one task creates a file to be used by another task? Must one task explicitly transfer a name for the resource to the other? The solution to both these dilemmas is the same: give the name space some structure.

MyNameSpace=(MyDefaultFrame,InboxFrame,FrameA,FrameB)

A name space consists of an ordered list of frames. Each frame contains the association between the task's name for a resource and a specification for the resource. Both name spaces and frames are named resources, so they can be manipulated in the same way as any other resource. Once a name association is put into a frame, the name is available to any task with permission to use the frame.
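Name lookup through an ordered list of frames can be sketched with frames as plain dictionaries shared by reference. This is an illustrative model only; the `NameSpace` class and the string handles are hypothetical, but the behavior follows the text: the first frame holding a name wins, and a name added to a shared frame is immediately visible to every name space that includes it.

```python
from typing import Dict, List, Optional

Frame = Dict[str, str]  # task's name -> resource specification (here a handle)


class NameSpace:
    def __init__(self, frames: List[Frame]):
        self.frames = frames  # ordered; earlier frames take precedence

    def lookup(self, name: str) -> Optional[str]:
        for frame in self.frames:
            if name in frame:
                return frame[name]
        return None
```

For example, if one name space lists a frame for sally before a frame for chuck, a name defined in both resolves to sally's entry, while a name only chuck defines still resolves to his.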

The sharing problem is solved by defining a frame that both tasks can use. This frame can be a global frame available to all tasks, which gives us the file visibility of conventional systems. A global frame is usually built during system start-up and put into the name space of every task. On the other hand, this frame could be shared by all tasks in a single login session. It is built as part of the login process and given to all tasks in the session. Finally, the frame can be constructed at the request of a task and shared by the usual means. Once both tasks share the frame, any name put into the frame is immediately available to both tasks.

We also use frames to avoid the name multiplicity problem. Each mailbox has a frame associated with it. When a message is delivered, the names of the resources being transferred are put into the frame attached to the mailbox. The recipient can use these names by including the frame in its name space, or it can copy the entries to another frame with the same names or different ones.

Frames are useful for handling other kinds of name multiplicities. If a task is collaborating with two users, say chuck and sally, it can decide which user's names take precedence. By putting sally's frame first in the name space, a task can assure that it will see a name association in chuck's frame only if it doesn't exist in sally's.

A task can construct many name spaces. An entry in each name field in the outbox envelope is used to tell the Core the name of the name space to use for that resource. Hence, a set of resources can be hidden for the purpose of a particular message by not including the frame containing their name associations in the name space.

Building name spaces can be quite onerous if the task has to list explicitly every frame to be included. In particular, we can envision getting a large set of frames from a name mapping service. Building a name space by listing all these frames is prone to error and makes it difficult for the provider to make changes. We solve this problem by having each frame specify an ordered list of child frames. A task can now build a name space by simply listing a modest number of frames and a set of traversal rules, say depth first, and stopping criteria, such as not including child frames from certain sources. Once built, the name space can be used many times.
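Flattening frames with ordered child frames into a name space can be sketched as a depth-first (pre-order) traversal with a stopping criterion. The `source` attribute and the `include` predicate are hypothetical; in this sketch a rejected frame's children are skipped along with it, and each frame is visited at most once so shared or cyclic children do no harm.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Frame:
    name: str
    source: str  # who supplied the frame (hypothetical attribute)
    bindings: Dict[str, str] = field(default_factory=dict)
    children: List["Frame"] = field(default_factory=list)  # ordered child frames


def build_name_space(roots: List[Frame],
                     include: Callable[[Frame], bool] = lambda f: True) -> List[Frame]:
    ordered: List[Frame] = []
    seen = set()  # ids of visited frames (dataclass instances are unhashable)

    def visit(frame: Frame) -> None:
        if id(frame) in seen or not include(frame):
            return  # stopping criterion: skip this frame and its subtree
        seen.add(id(frame))
        ordered.append(frame)
        for child in frame.children:
            visit(child)

    for root in roots:
        visit(root)
    return ordered
```

Once built, the ordered list can be handed to the ordinary first-match lookup and reused for many requests.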

All this is great, but where does the Core start the lookup? If everything is a named resource, where does the name recursion end? It turns out that this bootstrap is straightforward because each mailbox has an associated frame. We call the frame associated with a task's outbox its bootstrap frame. Some names are put into this frame when the task checks in. Among them are names for a mandatory key ring and a default name space. The task is free to change these names, but these resources will still be used to begin the name look-up. The mandatory key ring is presented to the Core on every request; the default name space is used whenever a name specification does not designate a name space.

When a message is delivered to the Core, it looks into the bootstrap frame associated with the mailbox. There it finds the RCHs for the mandatory key ring and default name space. This name space is used to find the list of frames that contain the name associations specified in the message envelope that don't designate a name space. The keys on the mandatory key ring are used to check the permissions to these resources. That's all there is to it. The Core can now search through the frames in the designated name spaces using the keys on the designated key rings.
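The bootstrap can be sketched as a Core that keys each task's bootstrap frame by its outbox, the one resource never looked up by name. This is a simplified model under our own assumptions: each repository entry carries a single lock, frames are dictionaries, and the class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Set

Frame = Dict[str, str]  # name -> repository handle


@dataclass
class Entry:
    lock: str  # one lock per entry keeps the sketch small (assumption)


@dataclass
class BootstrapFrame:
    mandatory_key_ring: Set[str]
    default_name_space: List[Frame]


class Core:
    def __init__(self, repository: Dict[str, Entry]):
        self.repository = repository
        # Keyed by outbox: this lookup is what grounds the name recursion.
        self.bootstrap: Dict[str, BootstrapFrame] = {}

    def check_in(self, outbox: str, key_ring: Set[str], default_ns: List[Frame]) -> None:
        self.bootstrap[outbox] = BootstrapFrame(set(key_ring), default_ns)

    def resolve(self, outbox: str, name: str,
                name_space: Optional[List[Frame]] = None,
                extra_keys: Iterable[str] = ()) -> str:
        boot = self.bootstrap[outbox]
        keys = boot.mandatory_key_ring | set(extra_keys)  # mandatory ring always presented
        frames = boot.default_name_space if name_space is None else name_space
        for frame in frames:  # the first frame containing the name decides
            if name in frame:
                handle = frame[name]
                if self.repository[handle].lock not in keys:
                    raise PermissionError(name)
                return handle
        raise KeyError(name)
```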

We now see the only resource in the entire Client Utility that isn't accessed by specifying a name, the task's outbox. This resource can't have a name because the Core has no place to look for the name association. It is this feature that grounds the name lookup recursion.

So far we've acted as if the application's protection domain automatically contains names for all the resources the task will ever need. Clearly, this isn't the case. We need some way for a task to add name associations to its protection domain. One approach would be to ask another task to transfer the name association, which we allow, but we also allow a different approach.

Each entry in the repository has an optional set of attributes. A task can add name associations to its protection domain by asking the Core to give it associations for all the resources that have certain properties defined by their attributes. All resources that match the request get bound to a single name in the requester's name space.
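An attribute look-up that binds every matching resource to one name can be sketched as below. The repository is modeled as a dictionary from handles to attribute dictionaries, and `bind_matching` is a hypothetical name; the predicate stands in for whatever vocabulary-based matching the Core performs.

```python
from typing import Any, Callable, Dict, List


def bind_matching(repository: Dict[str, Dict[str, Any]],
                  predicate: Callable[[Dict[str, Any]], bool],
                  frame: Dict[str, List[str]], name: str) -> List[str]:
    """Bind all handles whose attributes satisfy `predicate` to `name`."""
    handles = [h for h, attrs in repository.items() if predicate(attrs)]
    frame[name] = handles  # a single name for the whole set of matches
    return handles
```

A request for all printers would thus give the requester one name, say printers, that stands for every matching resource it is entitled to see.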

Defining a single attribute vocabulary for all uses and all times is not a good idea, even if room is left open to extend it. Instead, we'd like to let the attributes, like the resource specific data and message payload, have a syntax and semantic content that the Core does not in general understand. We provide for this case by allowing any task to create a new attribute vocabulary that the Core will use when matching requests against resource attributes. These vocabularies are named resources and can have attributes of their own. The recursion is grounded by making a Core vocabulary available to all tasks.

A vocabulary consists of a set of rules. Attributes are name-value pairs, and the vocabulary associates a value type with each name. For example, TITLE might be associated with a string while SIZE is associated with an integer. The vocabulary also contains a matching rule for each field. For example, a shoe size vocabulary might match a request for a 10C shoe with a 9D. It is also possible for a matching rule to denote other vocabularies that it can match. For example, a US shoe size vocabulary could match entries in a European shoe size vocabulary.
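A vocabulary with typed fields and per-field matching rules might look like this. It is a sketch only: rules here are plain Python callables for brevity, whereas the report's Core restricts vocabularies to a fixed toolkit of types and comparisons. The `SHOE_SIZE` field and its tolerance (at most one size in length and one letter in width) are hypothetical choices that happen to let 10C match 9D.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class FieldRule:
    value_type: type
    matches: Callable[[Any, Any], bool]  # (requested, offered) -> bool


class Vocabulary:
    def __init__(self, name: str, rules: Dict[str, FieldRule]):
        self.name = name
        self.rules = rules

    def validate(self, attrs: Dict[str, Any]) -> bool:
        """Every attribute must be defined and have the declared type."""
        return all(k in self.rules and isinstance(v, self.rules[k].value_type)
                   for k, v in attrs.items())

    def match(self, request: Dict[str, Any], offered: Dict[str, Any]) -> bool:
        for key, wanted in request.items():
            rule = self.rules.get(key)
            if rule is None or key not in offered or not rule.matches(wanted, offered[key]):
                return False
        return True


def _shoe_close(requested: str, offered: str) -> bool:
    # Hypothetical tolerance: one size in length, one letter in width.
    return (abs(int(requested[:-1]) - int(offered[:-1])) <= 1
            and abs(ord(requested[-1]) - ord(offered[-1])) <= 1)


shoes = Vocabulary("us-shoes", {
    "TITLE": FieldRule(str, lambda r, o: r.lower() == o.lower()),
    "SHOE_SIZE": FieldRule(str, _shoe_close),
})
```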

Since the Core will be using the vocabulary to match the attributes of resources registered in the Core repository, we can't let it run code provided by client tasks. Instead, we provide a toolkit consisting of basic data types, comparison operations, and logical combinations. A vocabulary specification language is part of the Client Utility specification.

There may be times when we want to do a look-up in a different vocabulary. In this case, we can provide an attribute vocabulary matching and/or translating service. The request takes the form of a message that is routed to the task doing the translation with a payload containing the request. The translator can now return a new look-up request in the new vocabulary.
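A translating service can be sketched as a function from a look-up request in one vocabulary to an equivalent request in another. The request layout and the per-field map of target names and converters are hypothetical; the inch-to-centimeter conversion simply gives the translator something concrete to do.

```python
from typing import Any, Callable, Dict, Tuple

# source field -> (target field, value converter)
FieldMap = Dict[str, Tuple[str, Callable[[Any], Any]]]


def translate_request(request: Dict[str, Any], target_vocab: str,
                      field_map: FieldMap) -> Dict[str, Any]:
    attrs = {}
    for key, value in request["attributes"].items():
        if key not in field_map:
            raise KeyError(f"no translation for field {key!r}")
        new_key, convert = field_map[key]
        attrs[new_key] = convert(value)
    # The translator returns a new look-up request rather than doing the look-up.
    return {"vocabulary": target_vocab, "attributes": attrs}


imperial_to_metric: FieldMap = {
    "TITLE": ("TITLE", lambda v: v),
    "LENGTH_IN": ("LENGTH_CM", lambda v: round(v * 2.54, 2)),
}
```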

It is important for security reasons that information not be leaked between the owner of the resource that defines its attributes and the requester. We don't want a malicious task to know all the attributes on a resource because the information can be used for an attack. We don't want a malicious provider to garner information on the task's resource requests. In addition, we want the requester to decide what constitutes a match to protect against an unethical supplier that gets its resource heavy usage by saying it matches every request. On the other hand, we want the resource owner to determine what constitutes a match in case one of the attributes specifies a password. The Core, the only component that sees both the attribute description in the repository and the one in the look-up request, does the matching. A match occurs only when both the matching rules of the look-up request and those of the resource report one.
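The two-sided rule can be sketched as a Core-side function that evaluates both parties' rules and reveals only the verdict. The rule signatures and the example rules below are hypothetical; the point is that a supplier claiming to match everything gains nothing, and a resource guarded by a password attribute still decides for itself.

```python
from typing import Any, Callable, Dict

Attrs = Dict[str, Any]
Rule = Callable[[Attrs, Attrs], bool]  # (request attrs, resource attrs) -> bool


def core_match(request: Attrs, request_rule: Rule,
               resource: Attrs, resource_rule: Rule) -> bool:
    """Both sides must agree; only this boolean leaves the Core."""
    return bool(request_rule(request, resource)) and bool(resource_rule(request, resource))


def requester_rule(req: Attrs, res: Attrs) -> bool:
    return res.get("TYPE") == req.get("TYPE")


def greedy_rule(req: Attrs, res: Attrs) -> bool:
    return True  # an unethical supplier claiming to match every request


def password_rule(req: Attrs, res: Attrs) -> bool:
    return req.get("PASSWORD") == res.get("PASSWORD")
```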

Attribute descriptions can be included in a name association along with repository handles. Hence, a name association can be

What if we want some more advanced forms of security? For example, audit trails are one of the easiest and most critical steps in dealing with attackers. Also, you may have noticed that the Client Utility Core doesn't have the concept of authentication, not even something as simple as passwords.

We allow for these extensions by having a field in the repository entry that lists resources that act as authorizers. If the authorizer is just building an audit trail, the Core forwards a message to the authorizer when it puts a name association for the resource into the frame associated with the recipient's mailbox. We call this a notify authorizer. We can also designate the authorizer as a grant authorizer. In this situation, a name association for the resource is not delivered. Instead, the recipient is told that delivery is pending. The partial association bound to the name does not allow the name to be used, but it does contain information on how to complete the association. Only a grant authorizer for such a resource can forward a name association for it to another task.
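The two kinds of authorizer can be sketched at the point where the Core places a name association in the recipient's mailbox frame. This is a minimal model with hypothetical names; in particular, we assume notify authorizers are told about every transfer, including those that end up pending behind a grant authorizer.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Authorizer:
    kind: str                                         # "notify" or "grant"
    inbox: List[tuple] = field(default_factory=list)  # messages forwarded by the Core


@dataclass
class NameAssociation:
    handle: str
    pending: bool = False                      # True: partial association, not usable yet
    complete_via: Optional[Authorizer] = None  # grant authorizer that can complete it


def transfer(handle: str, authorizers: List[Authorizer],
             recipient_frame: Dict[str, NameAssociation],
             name: str, recipient: str) -> NameAssociation:
    grant = next((a for a in authorizers if a.kind == "grant"), None)
    for a in authorizers:
        if a.kind == "notify":  # audit trail: record the transfer and carry on
            a.inbox.append(("transferred", handle, recipient))
    if grant is None:
        assoc = NameAssociation(handle)
    else:  # withhold the usable association; say how to complete it
        assoc = NameAssociation(handle, pending=True, complete_via=grant)
    recipient_frame[name] = assoc  # the frame attached to the recipient's mailbox
    return assoc
```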

Authorizers let us implement some interesting functions. As noted, we can build an audit trail of what task was granted what name association when. We can also use a notify authorizer as an interface to a system monitoring tool. For example, if memory segments are treated as named resources, we can display memory usage on a task by task basis by tracking the transfer of name associations for memory segments to user tasks.
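A memory monitor built as a notify authorizer might be sketched like this. It is illustrative only: the class name and segment sizes are hypothetical, and we assume a segment counts against whichever task most recently received a name association for it.

```python
from collections import defaultdict
from typing import Dict


class MemoryMonitor:
    """Hypothetical notify authorizer for memory-segment resources."""

    def __init__(self, segment_sizes: Dict[str, int]):
        self.segment_sizes = segment_sizes  # handle -> size in bytes
        self.holder: Dict[str, str] = {}    # handle -> task last granted it

    def notify(self, handle: str, recipient: str) -> None:
        self.holder[handle] = recipient     # called by the Core on each transfer

    def usage(self) -> Dict[str, int]:
        totals: Dict[str, int] = defaultdict(int)
        for handle, task in self.holder.items():
            totals[task] += self.segment_sizes[handle]
        return dict(totals)
```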

Here's a rather extreme possible use. Say that we make a time slice a named resource. When the scheduler swaps a task back in, it gives the task a time slice. If this task needs a service, say to do a message/reply with another task, it can transfer a name association for the time slice along with the message. Making the scheduler a notify authorizer allows it to know that the message recipient should be scheduled next. The reply can send back the name association for the time slice. When the time slice finally expires, the scheduler can create a new time slice and give it to some other task.

Grant authorizers also have many uses. If a resource is to be protected with an access control list, the grant authorizer can assure that the resource is only made available to tasks in the list. The requesting task's identity can be determined in many ways, one being authentication carried in the message payload. We also use a grant authorizer to handle password protected resources; the grant authorizer gets notified when the partial association for the resource is to be completed. The authorizer can then check the message payload for the proper password. Actually, the authorization can be arbitrarily complex, including challenge response sequences.
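A grant authorizer that completes a partial association after an access control list check and a password check can be sketched as follows. We assume the requester's identity has already been established, for instance by authentication carried in the payload; the class names are hypothetical. `hmac.compare_digest` is used so the password comparison does not leak timing information.

```python
import hmac
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class PartialAssociation:
    handle: str
    pending: bool = True


class PasswordGrantAuthorizer:
    def __init__(self, password: str, acl: Optional[Set[str]] = None):
        self._password = password.encode()
        self._acl = acl  # None means no access control list

    def complete(self, assoc: PartialAssociation, requester: str, payload: dict) -> bool:
        if self._acl is not None and requester not in self._acl:
            return False  # requester not on the access control list
        supplied = str(payload.get("password", "")).encode()
        if not hmac.compare_digest(supplied, self._password):
            return False  # wrong password: association stays pending
        assoc.pending = False  # the name can now be used
        return True
```

A challenge-response exchange would replace the single payload check with several messages, but the outcome is the same: the association stays pending until the authorizer is satisfied.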