Problem: edges and other abrupt color variation

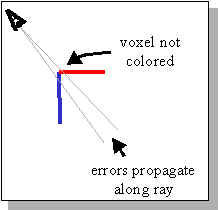

Where there is a large, abrupt color variation on a surface, for example at an edge, the corresponding voxel in the reconstruction projects onto pixels with a high color standard deviation. Hence, voxel-coloring fails to color the voxel for any reasonable color threshold. Worse, because the voxel is not colored, the occlusion bitmaps are not set for the pixels it projects onto, so no voxels can be colored along the rays from the camera centers through the voxel. Thus, errors propagate.
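
A minimal sketch of the baseline consistency test described above, assuming a voxel is colored only when the standard deviation of its projected pixel colors falls below a fixed threshold. The definition of color standard deviation (square root of the summed per-channel variances), the function names, and the threshold value are our assumptions, not details taken from the original algorithm.

```python
import math


def color_std(pixels):
    """Standard deviation of a list of (r, g, b) colors.

    Assumed definition: square root of the summed per-channel variances.
    """
    n = len(pixels)
    mean = [sum(p[c] for p in pixels) / n for c in range(3)]
    var = sum((p[c] - mean[c]) ** 2 for p in pixels for c in range(3)) / n
    return math.sqrt(var)


def is_consistent(pixels, threshold):
    """Fixed-threshold test: color the voxel only if its pixels agree."""
    return color_std(pixels) < threshold


RED, GREEN = (255, 0, 0), (0, 255, 0)

# Voxel inside a red face: every projected pixel is red.
face_pixels = [RED, RED, RED, RED]
# Voxel straddling a red/green edge: its projection covers both faces.
edge_pixels = [RED, RED, GREEN, GREEN]

THRESHOLD = 30.0  # hypothetical threshold value
print(is_consistent(face_pixels, THRESHOLD))  # True
print(is_consistent(edge_pixels, THRESHOLD))  # False: std is about 180
```

The edge voxel's deviation here is exactly the same as that of a voxel seen as pure red in two images and pure green in two others, so a fixed threshold cannot accept the edge without also accepting genuinely inconsistent voxels.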

To reconstruct scenes with large, abrupt color variations, we modify the criteria for coloring a voxel. We color a voxel if either:

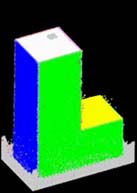

We ran voxel-coloring on a set of synthetic images of an L-shaped object, each face of which is colored with a different color from the corners of the RGB color cube. The image below on the left shows the reconstruction produced using the original algorithm; the image on the right was produced using the modified coloring criteria.

This is a generalization of the edge problem described above. Some surfaces in a scene can have a relatively high spatial color variation while others have a relatively low variation. If the color threshold is set low, voxel-coloring fails to color the areas with high variation. On the other hand, if the threshold is set high, small color variations are ignored, resulting in cusping in the areas with low variation. (Cusping is a distortion in which a surface in the reconstruction is warped toward the cameras, relative to the actual surface.)

Compute the color variation among the non-occluded pixels of the voxel's projection in each image separately. Increase the color threshold when these per-image values are high, and decrease it when they are low. For example, we have tried a threshold proportional to the mean of the per-image deviations, and also one proportional to their minimum.
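
One way to sketch the adaptive threshold, assuming `per_image_pixels` holds, for each image, the colors of the non-occluded pixels in the voxel's projection. The threshold is a constant `k` times either the mean or the minimum of the per-image deviations, matching the two variants above. The value of `k`, the function names, the deviation formula, and the use of `<=` (so a perfectly uniform voxel with zero deviation still passes) are our assumptions.

```python
import math


def color_std(pixels):
    """Assumed color deviation: sqrt of the summed per-channel variances."""
    n = len(pixels)
    mean = [sum(p[c] for p in pixels) / n for c in range(3)]
    var = sum((p[c] - mean[c]) ** 2 for p in pixels for c in range(3)) / n
    return math.sqrt(var)


def adaptive_threshold(per_image_pixels, k=2.0, mode="mean"):
    """Threshold proportional to the mean or minimum per-image deviation."""
    devs = [color_std(px) for px in per_image_pixels]
    if mode == "mean":
        return k * sum(devs) / len(devs)
    if mode == "min":
        return k * min(devs)
    raise ValueError(f"unknown mode: {mode!r}")


def is_consistent_adaptive(per_image_pixels, k=2.0, mode="mean"):
    """Compare the cross-image deviation against the adaptive threshold."""
    # Skip images in which the voxel is fully occluded.
    visible = [px for px in per_image_pixels if px]
    if not visible:
        return False  # assumption: a voxel no camera sees stays uncolored
    all_pixels = [p for px in visible for p in px]
    return color_std(all_pixels) <= adaptive_threshold(visible, k, mode)


RED, GREEN = (255, 0, 0), (0, 255, 0)

# Image 1 sees the voxel straddling an edge; image 2 sees only the red face.
views = [[RED, GREEN], [RED, RED]]
print(is_consistent_adaptive(views, mode="mean"))  # True
print(is_consistent_adaptive(views, mode="min"))   # False

# Uniform in each image but different across images: genuinely inconsistent.
conflict = [[RED, RED], [GREEN, GREEN]]
print(is_consistent_adaptive(conflict))  # False
```

The two modes disagree on the first example: the mean is raised by the image that straddles the edge, while the minimum is set by the image that sees only one face.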

Our early experiments with voxel-coloring compared the color of voxels using Euclidean distance in the RGB cube. This caused two kinds of problems.

First, the RGB space is not uniform in the following sense: two colors that we can easily distinguish can be quite close together in RGB space if they are dark, whereas two colors that are hard to distinguish can be relatively far apart if they are bright. Consequently, it is often not possible to find a color threshold that works well in both the dark and bright parts of a scene. The CIELab color space is designed to be perceptually uniform, which suggests that it might allow good reconstruction in both the bright and dark portions of a scene with a single color threshold. In experiments, we did obtain better results using CIELab for some scenes.
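
To make the non-uniformity concrete, the sketch below converts 8-bit colors to CIELab and compares Euclidean distances. It assumes the input values are sRGB-encoded with a D65 white point, which the original does not specify, and it uses the simple CIE76 difference (Euclidean distance in Lab); both are our choices, not necessarily what was used in the experiments.

```python
import math

# sRGB (D65) to CIE XYZ matrix and the D65 reference white.
M = ((0.4124, 0.3576, 0.1805),
     (0.2126, 0.7152, 0.0722),
     (0.0193, 0.1192, 0.9505))
WHITE = (0.95047, 1.0, 1.08883)


def srgb_to_linear(v):
    """Undo the sRGB transfer curve for one 8-bit channel value."""
    c = v / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def rgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to CIELab (L*, a*, b*)."""
    lin = [srgb_to_linear(v) for v in rgb]
    xyz = [sum(M[i][j] * lin[j] for j in range(3)) for i in range(3)]

    def f(t):
        # Cube root above the CIE threshold, linear segment below it.
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = (f(xyz[i] / WHITE[i]) for i in range(3))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


dark = ((20, 20, 20), (40, 40, 40))
bright = ((215, 215, 215), (235, 235, 235))

# Identical distances in the RGB cube...
print(math.dist(*dark), math.dist(*bright))
# ...but the dark pair is farther apart in CIELab.
print(math.dist(*map(rgb_to_lab, dark)), math.dist(*map(rgb_to_lab, bright)))
```

With sRGB-encoded inputs the gap is modest (about 9.8 versus 7.1 Lab units for these grays); it would be larger if the camera values were linear in intensity.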

Second, some image sets have inconsistent lighting, for example those obtained with a fixed light source and a subject rotating on a turntable. As a result, a point on a surface can project to different colors in different images and fail to be reconstructed. Using a color space that separates chromaticity and luminance, we can construct a color distance measure that weights luminance less than chromaticity, thereby reducing the effect of inconsistent lighting.
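
A minimal sketch of such a measure, assuming colors have already been converted to CIELab, where L* carries luminance and a*, b* carry chromaticity. The luminance weight `w_lum` and its default value are hypothetical; the original does not say how the weighting was done.

```python
import math


def weighted_lab_distance(lab1, lab2, w_lum=0.5):
    """Distance in CIELab with the lightness difference down-weighted.

    w_lum < 1 makes brightness changes (e.g. from uneven lighting) count
    less than changes in hue or saturation; w_lum = 1 gives plain CIE76.
    """
    dl = lab1[0] - lab2[0]
    da = lab1[1] - lab2[1]
    db = lab1[2] - lab2[2]
    return math.sqrt((w_lum * dl) ** 2 + da ** 2 + db ** 2)


# The same surface point under brighter and dimmer lighting: only L* differs.
lit, shaded = (70.0, 20.0, 30.0), (50.0, 20.0, 30.0)
# A different surface of the same lightness: only chromaticity differs.
other = (70.0, 40.0, 30.0)

print(weighted_lab_distance(lit, shaded))  # 10.0
print(weighted_lab_distance(lit, other))   # 20.0
```

Both pairs are 20 units apart under the unweighted distance; the weighting halves the penalty for the pure lighting change while leaving the chromaticity change untouched.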

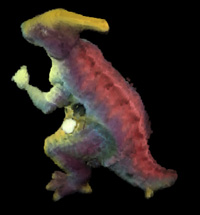

The reconstruction below on the left was created using the RGB color space. The color threshold we used was too low to produce a good reconstruction of the wrist. Thresholds that were high enough to reconstruct the entire wrist caused significant distortion elsewhere. The reconstruction on the right was created using the CIELab color space. Using CIELab, it was possible to find a threshold that produced a good reconstruction of the wrist, as well as the rest of the dinosaur.
